Once upon a time, there was a hill climber.

The hill climber wanted to climb the tallest hill. However, the land was foggy and they couldn't see far. So, they climbed in whatever nearby direction seemed uphill.

They climbed and climbed, and finally reached a peak. “At last,” the hill climber said, “I've climbed the tallest hill!”

What our protagonist has done is known as the "Hill Climbing" algorithm. Given a point on a landscape of possibilities – where higher points represent better states – the algorithm just looks at nearby points (because evaluating all points would be too costly), moves to the "highest" nearby point, and repeats. Eventually, the algorithm will reach a peak.
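To make that loop concrete, here is a minimal sketch in Python. It assumes a toy one-dimensional landscape stored as a list of heights, where the only "nearby points" are the left and right neighbors. The names `hill_climb` and `landscape`, and the numbers in the list, are made up for illustration.

```python
def hill_climb(heights, start):
    """Greedy hill climbing on a 1-D list of heights.

    Returns the index of the peak reached from `start`.
    """
    position = start
    while True:
        # The "fog": we can only see the immediate left and right neighbors.
        neighbors = [i for i in (position - 1, position + 1)
                     if 0 <= i < len(heights)]
        if not neighbors:
            return position  # a one-point landscape has nowhere to go
        best = max(neighbors, key=lambda i: heights[i])
        if heights[best] <= heights[position]:
            return position  # no neighbor is higher, so this is a peak
        position = best  # step to the highest nearby point and repeat


# Hypothetical landscape, chosen only for this example.
landscape = [1, 3, 5, 4, 2, 6, 9, 7]
print(hill_climb(landscape, start=0))  # prints 2
```

Starting from index 0, the climber walks up to index 2 (height 5) and stops there, because both neighbors are lower.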

Just one problem.

That peak may not be *the* peak.

Our hero is now stuck in what's called a "local maximum".

The hill climber looked to the left. Doesn't seem uphill.

The hill climber looked to the right. Doesn't seem uphill.

The hill climber felt trapped. “Is this all there is?” they thought. “Is life literally all downhill from here?”

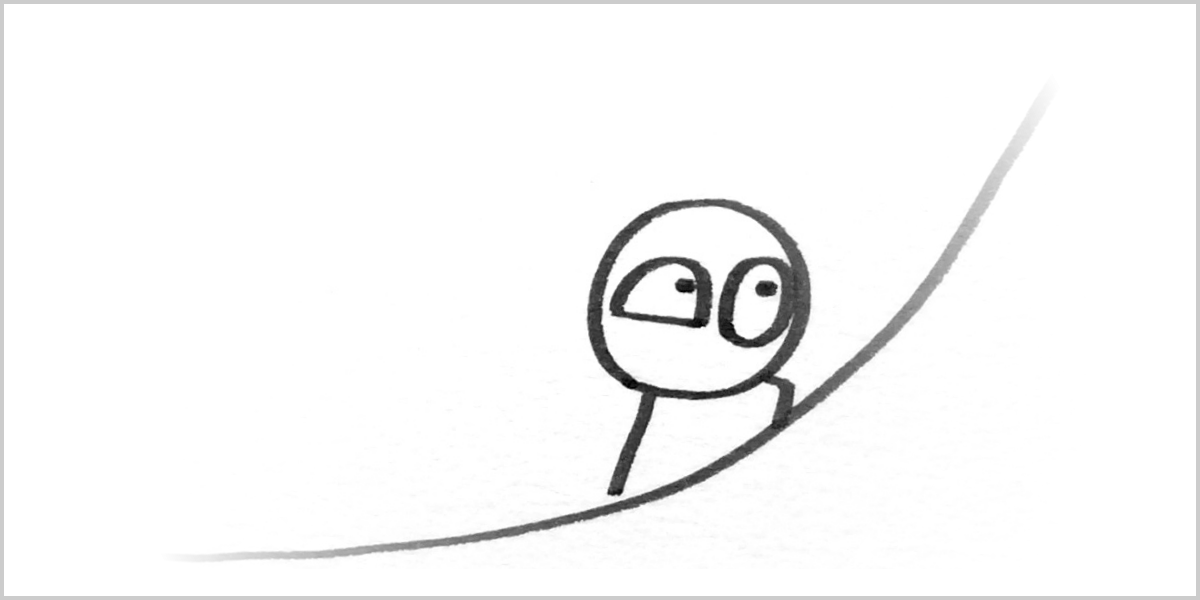

Little did they know, there are better algorithms. Algorithms like “Stochastic Hill Climbing” and “Simulated Annealing” tackle the local-maximum problem by adding randomness. This lets the algorithm occasionally take worse steps in the short run, so it can find better states in the long run.
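As a rough illustration of the "adding randomness" idea, here is a small sketch of Simulated Annealing on a toy one-dimensional list of heights. Everything specific in it is an assumption for the example: the function name, the landscape, the starting temperature, the cooling rate, and the step count are not tuned or canonical values. Uphill steps are always accepted, while downhill steps are accepted with probability `exp(delta / temp)`, which shrinks as the temperature cools.

```python
import math
import random


def simulated_annealing(heights, start, steps=10_000,
                        start_temp=5.0, cooling=0.999, seed=0):
    """Simulated annealing on a 1-D list of heights.

    Returns the index of the highest point visited.
    All default parameter values are illustrative assumptions.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    position = best = start
    temp = start_temp
    for _ in range(steps):
        # Propose a random step left or right into the fog.
        candidate = position + rng.choice((-1, 1))
        if 0 <= candidate < len(heights):
            delta = heights[candidate] - heights[position]
            # Always go uphill; go downhill only sometimes,
            # and less often as the temperature drops.
            if delta >= 0 or rng.random() < math.exp(delta / temp):
                position = candidate
                if heights[position] > heights[best]:
                    best = position
        # Cool down, with a floor to avoid dividing by zero.
        temp = max(temp * cooling, 1e-9)
    return best


# Hypothetical landscape: a small hill at index 2, a taller one at index 6.
landscape = [1, 3, 5, 4, 2, 6, 9, 7]
print(simulated_annealing(landscape, start=0))
```

Early on, while the temperature is high, the walker readily steps down from the small hill at index 2 and crosses the valley. As the temperature falls, it behaves more and more like plain hill climbing. There is still no guarantee of finding the tallest hill in general; the randomness only improves the odds.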

Sometimes, you have to leave what's been working for you, and take a step down into the fog...

...to climb to a better place.