Wow, I haven't shipped anything in a year and a half, huh? Not even a blog post?

Sure, I've generated lots of ideas, at different stages of development, but in the end, all that matters is what's out there. So this month, I'm shipping this blog post about all the projects I haven't shipped (yet).

Motivations for this post:

- To scratch an itch.

- To give y'all a look into the "creative" "process".

- To say "First!!1!", in case someone else beats me to an idea. This is petty, and all my work is Creative Commons anyway, but... well, heart wants what the heart wants. And the heart wants to be petty.

- To get feedback early & often. Which projects resonate most with you? What would you personally benefit from? This may help me prioritize what to make, or generate new ideas!

This post contains 47 "project pitches", with the following info:

- The working title

- A ~2 minute pitch

- How much progress I've made.

- How long it's been on the backburner.

- How much time to finish it. (VERY CRAPPY ESTIMATES)

- How much I want to finish it. (So you can set your expectations for if I ever ship it, or figure out if you'd like to beat me to it.)

YOU CAN READ ANY OF THE PROJECT PITCHES IN ANY ORDER, OR SKIP THEM. Holy moly this full post is over an hour long. Each pitch is only a 1 to 5 minute read.

So, without further self-deprecation, here's 47 Things I Have Not Shipped But Might Eventually, Maybe:

Table of Contents

Categorized by interest/topic, but can be read (or skipped) in any order!

If you're on a desktop browser, you can click this icon on the right to access the Table of Contents at any time: →

Click ↪ to skip to that project, if it tickles your fancy:

- Why all the trans furry programmers? ↪

- Backwards Logic: logic gates that go forwards & backwards & sideways ↪

- The Quantum Octopus ↪

- Beating Goodhart's Law with CLAP: composite limited-award proxies ↪

- Bio-plausible neural networks ↪

- Automatically getting causation from correlation ↪

- Smooth Criminal: exploring the continuous prisoner's dilemma ↪

- AI Safety for Fleshy Humans ↪

- You Played Yourself: the game theory of self-modification ↪

- Will self-improving AI take off fast or slow? ↪

- What if System 2 just is System 1? ↪

- Math for people who have been scarred for life by math: or, Algebra in Pictures ↪

- Why don't brackets matter in multiplication? (novel visual proof!) ↪

- ...999.999... = 0 (a math shitpost) ↪

- Gödel's Proof, fully explained from scratch ↪

- "This sentence is false" is half-true, half-false: Fuzzy Logic vs Paradoxes ↪

- Your choosing-algorithm should choose itself: intro to Functional Decision Theory ↪

🔔 Explaining Science & Statistics

- Correlation is evidence of causation, & other Bayesian fun facts ↪

- Statistics without the Sadistics ↪

- Causal Networks tutorial ↪

- Systems Biology tutorial ↪

- Emotions in 3D: The Valence-Arousal-Dominance Model ↪

🧐 Personal Reflections / Philosophy

- Emotions are for motions: Notes on using feelings well ↪

- You don't need faith in humanity for a moral or meaningful life ↪

- You don't want assholes on your side ↪

- Virtue Ethics for Queer Atheist Nerds ↪

- If classic computers can be conscious, then dust is conscious ↪

- On non-reproductive, similar-in-age, consensual-adult incest ↪

👁🗨 Stories

- The Lolita Meets Clockwork Orange Story ↪

- Complex Jane: a love story with an imaginary friend ↪

- I've Become My Mother: a sci-fi story about raising yourself ↪

- Genetically Engineered Catgirl ↪

Turn on your speakers, there's audio of my amateur singing in this one! 🔊

- The Special Relativity Song ↪

- The General Relativity Song ↪

- The Intrusive Thoughts Song ↪

- The Pythagorean Theorem Song ↪

- The Longest Term ↪

- The 3Blue1Brown Jingle ↪

- Brand New Fetish ↪

🤷🏻♀️ Miscellaneous

- Trans voice tools/tutorials ↪

- Tool for making drip-feed courses (& courses I want to make) ↪

- (Something on Georgist Economics) ↪

- (Something on Pandemic Preparedness) ↪

- The Exit Strategy ↪

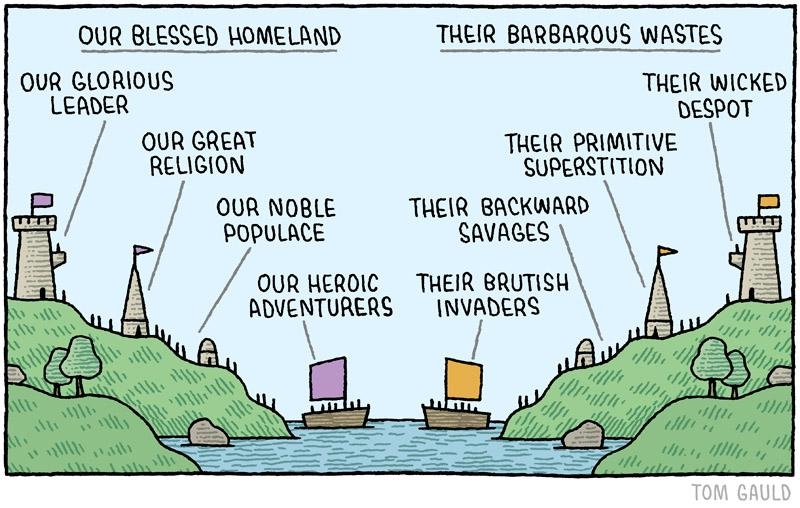

- We do advocacy, they do propaganda: A Collection of Russell Conjugations ↪

- Common Wisdom is a Two-Timing Sumnabitch ↪

- Clearing out the idea closet (47 projects on my backlog) ↪

🤔 Research Projects

After a decade of explaining science, I'd like to finally try doing science. (well, and math research, if you count math as "science")

Again, just dumping it all here to pettily claim "First!!1!":

- Why all the trans furry programmers? ↪

- Backwards Logic: logic gates that go forwards & backwards & sideways ↪

- The Quantum Octopus ↪

- Beating Goodhart's Law with CLAP: composite limited-award proxies ↪

- Bio-plausible neural networks ↪

- Automatically getting causation from correlation ↪

- Smooth Criminal: exploring the continuous prisoner's dilemma ↪

. . .

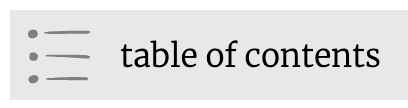

1. 😽 Why all the trans furry programmers?

Let's start with a goofy research project idea!

Context: I'm a trans woman, a member of the furry fandom, and a programmer. There is a very, very widely-recognized correlation amongst "My People":

(from this popular reddit post)


(Comic by AntisocialRavens, source! Edit Aug 31 2024: thank you to Nathan W & Raspberry Floof for independently finding this source, sorry it took me half a year to finally update this blog post.)


But does anecdote match data? If this correlation is real, what causes it? And roughly how many trans furry programmers are there in America?

Progress: 60% ▶️▶️▶️◻️◻️

- I've collected the stats and confirmed the pairwise correlations are indeed real! Trans folks are ~2x as likely to be Programmers & vice versa; Furries are ~10x as likely to be Programmers & vice versa; Trans folks are ~20x as likely to be Furries & vice versa.

- Another interesting finding: in America, there are roughly as many trans adults as computer programmers (~1.6 million).

- Doing a Fermi estimate (Fur-mi estimate?) using [ratio of furries who report going to conventions] & [number of attendees at the top furry conventions in the U.S.], I estimate there's probably [100,000 to 300,000] furries in the US.

- I've done a dozen informal interviews with folks who are 2-of-3-of-{trans, furry, programmer}, and consolidated their thoughts.

- I've investigated causal hypotheses, to explain the correlations between {trans, furry, programmer}. (Spoiler: It's probably autism spectrum.)

What's left to do:

- Polish the write-up

- Make a Monte Carlo sim to get error bars & sensitivity to assumptions

- Post a survey in trans programmer subreddits/Discords to ask how many of them also happen to be furries, to estimate P(furry | trans & programmer), which will let me calculate... how many trans furry programmers are there in America?
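A minimal sketch of how the Fermi estimate and the planned Monte Carlo error bars might fit together. It assumes the estimate has the shape "convention attendees divided by the share of furries who attend", which is one reading of the two inputs named above. Every number in it is a placeholder invented for illustration, not the collected stats, and the function names are hypothetical.

```python
import random

def estimate_furries(rng, attendees_range, attend_share_range):
    """One Monte Carlo draw: total furries = convention attendees / share who attend."""
    attendees = rng.uniform(*attendees_range)
    attend_share = rng.uniform(*attend_share_range)
    return attendees / attend_share

def monte_carlo(n_draws=10_000, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    # PLACEHOLDER input ranges, invented for this sketch (not real data):
    attendees_range = (30_000, 60_000)  # unique attendees at top U.S. conventions
    attend_share_range = (0.2, 0.4)     # share of furries who attend conventions
    draws = sorted(
        estimate_furries(rng, attendees_range, attend_share_range)
        for _ in range(n_draws)
    )
    # 5th, 50th, and 95th percentiles serve as rough error bars
    return draws[n_draws // 20], draws[n_draws // 2], draws[(19 * n_draws) // 20]

low, median, high = monte_carlo()
print(f"5%: {low:,.0f}  median: {median:,.0f}  95%: {high:,.0f}")
```

Widening or narrowing either placeholder range and re-running gives a quick feel for which assumption the final number is most sensitive to.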

Time on backburner: 1 year

Time to finish: 3 weeks

Desire to finish: 4/5 ⭐️⭐️⭐️⭐️☆ (It's cute & close to done anyway)

. . .

Speaking of "probably autism spectrum"...

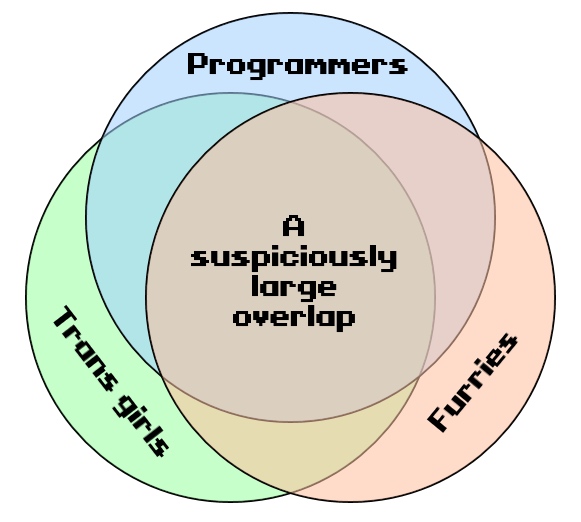

2. ↔️ Backwards Logic: logic gates that go forwards & backwards & sideways

(I'm sorry the following is very jargon-heavy. But, just clearing out my idea closet....)

During an isolating 2020 Canadian-winter pandemic lockdown, I went Full Crackpot. I spent four months obsessed with (what I thought to be) a promising way to crack the million-dollar P = NP problem.[1]

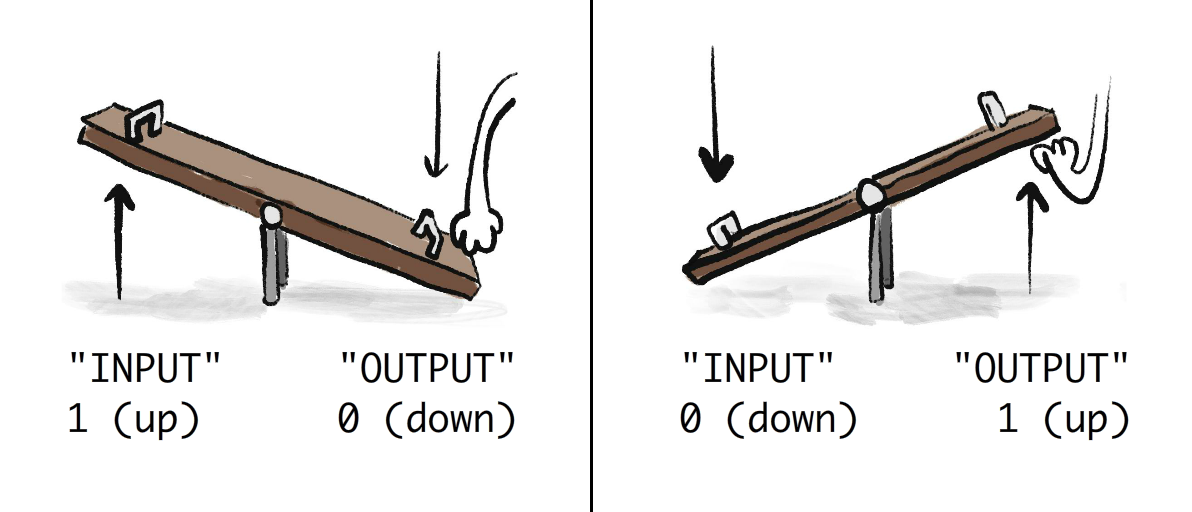

The inspiration: A see-saw is a NOT logic gate. Left side down, right side up. Left side up, right side down.

The important bit: you can do this in reverse. Move the right side up, and the left side goes down.

By manipulating the output, you can get the necessary input.

So I wondered, was there a way to:

- Build "bi-directional" NOT/AND/OR gates like this,

- Then use them to build a circuit that multiplies two binary numbers

- Then set the output of that circuit to some multiple of two primes

- To force the circuit to automatically give you the two primes as input?

- And, more generally, could this solve any Circuit-SAT problem (an NP-Complete problem)?

(A nuance about bi-directional OR gates; if you set the output to 1, it "wouldn't know" whether the inputs should be (0,0), (0,1), or (1,0). But it can know it's not (1,1). The hope was that a mechanical circuit [or simulation of it] would constraint-solve that correctly anyway. Same nuance with AND gates.)

Here's a simulation of a fuzzy+reversible Exclusive OR (XOR) circuit. When I pull the output to 1, it constraint-solves the inputs to be 0 & 1, in either order. When I pull the output to 0, it constraint-solves the inputs to be both 0 or both 1.

(After independently discovering this idea, I checked to see if it was done before. Of course it was, there's "nothing new under the sun". But, as far as I could find, there was only one paper in 2002 that did something similar, with water-based logic gates.[2] Conceptual, no experiments/simulations done. I couldn't find any "disowning" or "debunking" of their idea, so I guess I just had to try it and see if/how it failed.)

My simulation was messing up for larger cases, and building it for real would take forever. So eventually, all the above led to the following "simpler" algorithm:

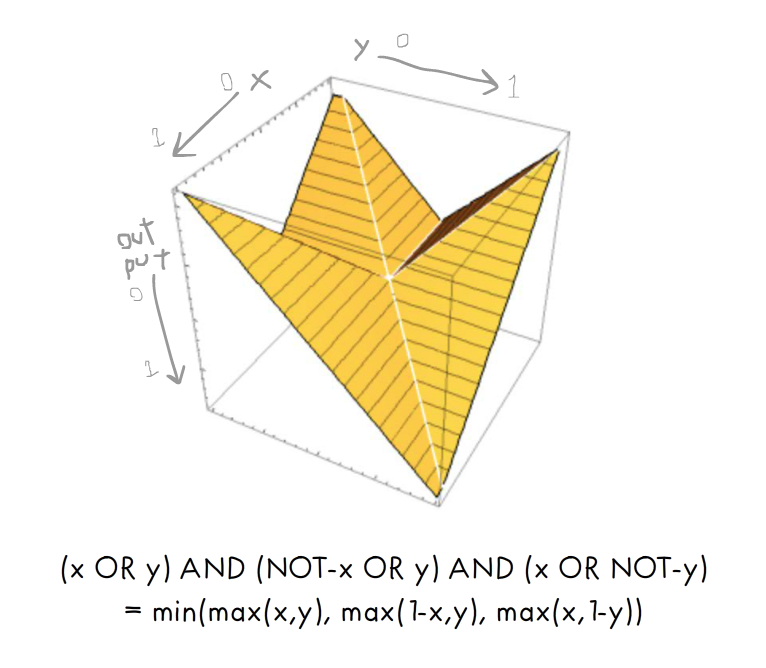

- Take a Circuit-SAT problem.

- Replace each logic gate with its fuzzy-logic equivalent: [3] \(NOT(x) = 1-x; \space \space AND(x,y)=min(x,y); \space \space OR(x,y)=max(x,y)\)

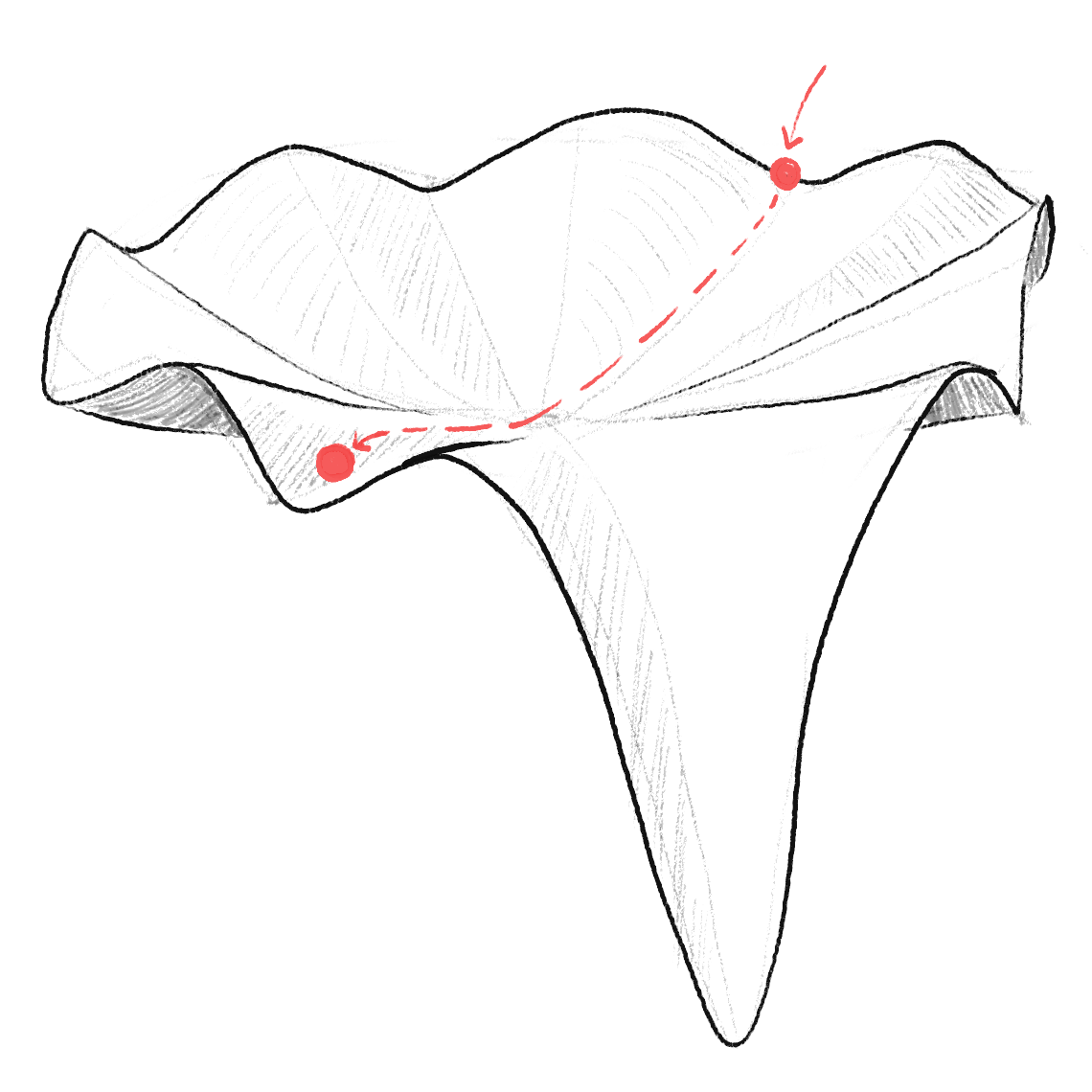

- This gives you a function \([0,1]^n \rightarrow [0,1]\) that you can interpret as the "energy landscape" of this mechanical circuit, if \(output = 1\) is "down". For example:

- As you can see above, there are lots of zero-gradient "ridges", but you can get rid of them by adding a small "centripetal force" towards the center, where all inputs are 0.5.

- And voilà, you have an energy landscape where all local minima are global minima, and they're all valid solutions! There's no flat/vanishing gradients, and there's only one saddlepoint, which we know from Deep Learning can be efficiently solved with stochastic gradient descent + momentum! So, I was hoping, that'd be enough to work!...

Alas, it's the most pathological saddlepoint I've ever met, so no dice.
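For anyone who wants to poke at the landscape, here is a minimal sketch of the fuzzy gates and the resulting energy function, using a fuzzy XOR as the example circuit. The quadratic `pull` term is an assumed form of the "centripetal force" (the exact form isn't specified above), and all function names are made up for this sketch.

```python
def fuzzy_not(x):
    return 1 - x

def fuzzy_and(x, y):
    return min(x, y)

def fuzzy_or(x, y):
    return max(x, y)

def fuzzy_xor(x, y):
    # XOR built from the three basic gates: (x AND NOT y) OR (NOT x AND y)
    return fuzzy_or(fuzzy_and(x, fuzzy_not(y)), fuzzy_and(fuzzy_not(x), y))

def energy(inputs, circuit, pull=0.01):
    """Energy landscape where output = 1 is 'down'.

    The `pull` term is an ASSUMED form of the 'centripetal force':
    a small quadratic penalty drawing every input toward 0.5.
    """
    centripetal = pull * sum((x - 0.5) ** 2 for x in inputs)
    return (1 - circuit(*inputs)) + centripetal

for point in [(0, 1), (1, 0), (0, 0), (1, 1), (0.5, 0.5)]:
    print(point, "xor =", fuzzy_xor(*point),
          "energy =", round(energy(point, fuzzy_xor), 4))
```

The two valid XOR solutions, (0, 1) and (1, 0), come out with the lowest energy. The center (0.5, 0.5) sits in between, and the two non-solutions are highest.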

Project pitch: Explain what I found anyway, to save some other sucker four months of work if they discover the same idea. Reversible & mechanical logic gates may also be helpful for nanobots or something, I dunno.

Progress: 80% ▶️▶️▶️▶️◻️

I got pretty far, as you can see above! "All that's left to do" is polish it up, try a few more ideas, then post it as a writeup.

Time on backburner: 4 years

Time to finish: 3 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

. . .

3. 🐙 The Quantum Octopus

Okay, let's go a bit more crack-potty: maybe the above problem could be solved with quantum physics?

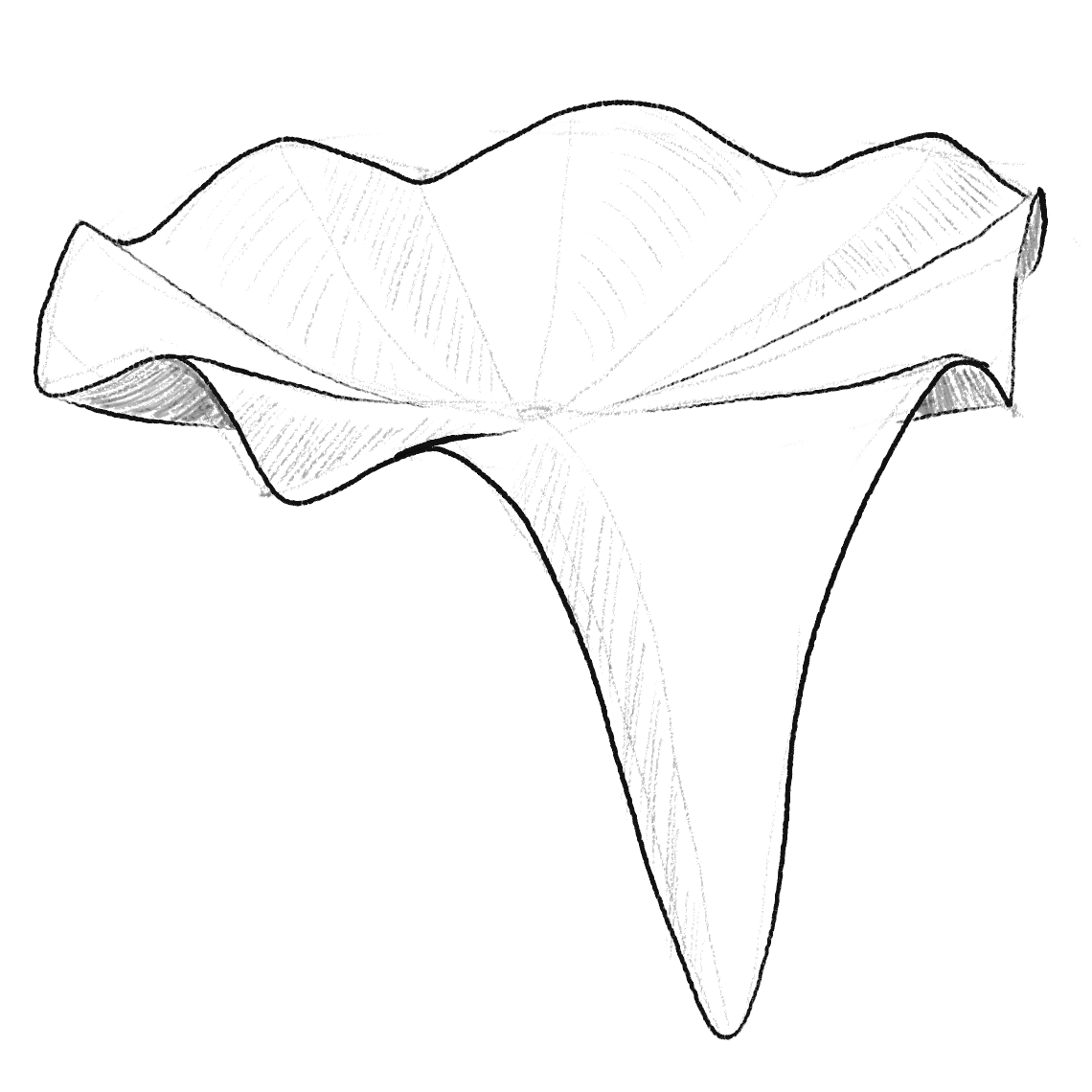

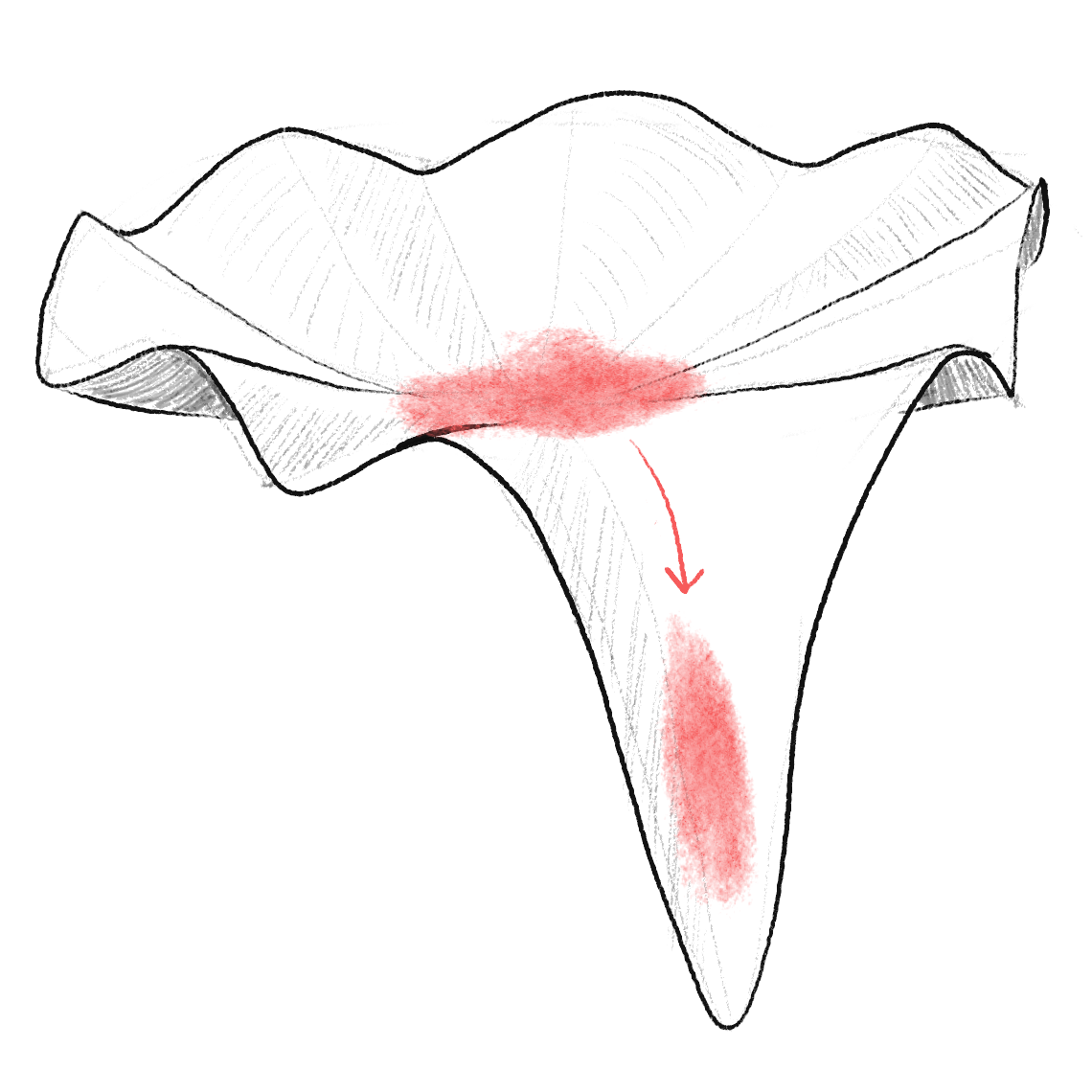

Above, I transformed the NP-Complete Circuit-SAT problem into a gradient descent problem, where all local minima are global minima, and there's only one saddlepoint. Unfortunately, that saddlepoint was very pathological. Apparently, this kind of saddlepoint is called an "octopus saddle"[4].

(Though, since it's in high n-dimensions, maybe it's a Cthulhu saddle?)

Good ol' stochastic gradient descent, even with momentum, can't solve it efficiently since it just brute-forces each "arm" of the octopus:

So I wondered: could a quantum wavefunction, because its position is "fuzzy", and thus "feels out" all the arms... and thus pulled by all nearby slopes... could that efficiently find its way down an octopus saddle?

(NOTE: I know quantum computers DO NOT solve problems by just "trying all the solutions", since when you measure it you can't choose which "branch" of the wavefunction it "collapses" into. This idea is different: I wanna see if I can coax ALL of a wavefunction down the correct arm, not just one branch.)

(Note 2: There are two main kinds of quantum computing. The more famous one is "quantum circuits"; this is the one with the infamous Shor's Algorithm that can break RSA encryption. The other one is "quantum annealing"; this is what D-Wave does. My project idea written above is closer to quantum annealing, though I think it's technically slightly different.)

(Note 3: The hard problem in quantum annealing is getting stuck in local minima, especially when the gap between local/global minima gets exponentially small. In the above problem, all local minima are global minima, and are valid solutions! And, if one follows my Circuit-SAT-to-landscape conversion, the gap between the saddlepoint and the global minimum stays constant. So, if you're a researcher in quantum annealing/adiabatic computation... email me?)


Progress: 0% ◻️◻️◻️◻️◻️

Right now it's just the above idea, but there's "not much" to do:

- Learn WebGPU, to make physics simulations

- "Just" simulate the Schrödinger wavefunction on an octopus-saddle-shaped potential well

- See how many sim-timesteps it takes for most of the wavefunction to go down the correct "arm", as the number of arms increases. If it increases linearly, bummer. But if it's constant or logarithmic, we're cooking with gas! Not as sexy as P = NP, but BQP = NP[5] ain't bad either!

Time on backburner: 3 years

Time to finish: 4 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

(Even if it fails, at least I'd learn WebGPU + making physics sims, which I could publish as a standalone toy.)

. . .

4. 👏 Beating Goodhart's Law with CLAP: composite limited-award proxies

The writer who pumps out clickbait to make views go up. The student who uses ChatGPT to make grades go up. The politician who makes false promises, then blames their opponents when they fail, to make votes go up.

What gets measured gets gamed. This is (roughly paraphrased) Goodhart's Law.

For the longest time, this law was "just" phrased in words & not formalized. But a few years ago, an acquaintance of mine, Yohan John, showed me his paper where he analyzes Goodhart's Law with causal modeling![6] (Manheim & Garrabrant also did it in another paper[7], but as far as I know these were independently discovered.)

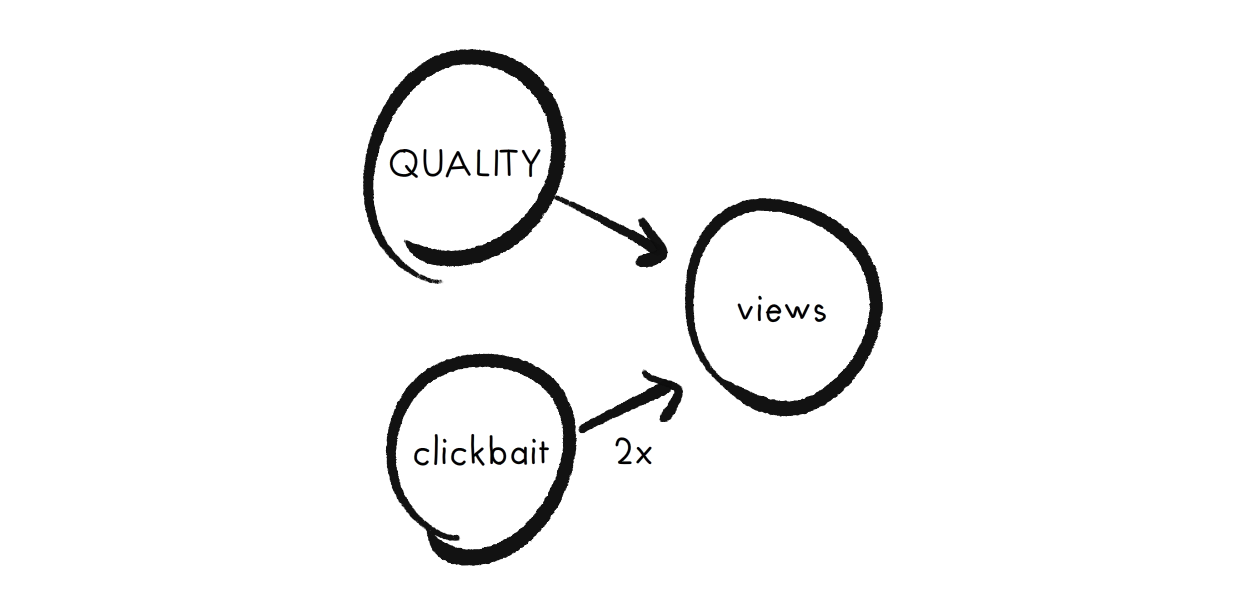

Here's the clickbait problem, as a causal diagram ⤵ (Read: "quality" and "clickbait" both affect "views", but clickbait has twice the effect.)

Let's re-write that as an equation:

\( views = quality + 2\times clickbait \)

Clickbait has 2x the Return-on-Investment (RoI) on Views than Quality. Naïvely, I thought this meant to maximize views, you'd put 2x more energy into Clickbait than Quality. Actually, it's worse: you'd put all your energy into Clickbait, not Quality.

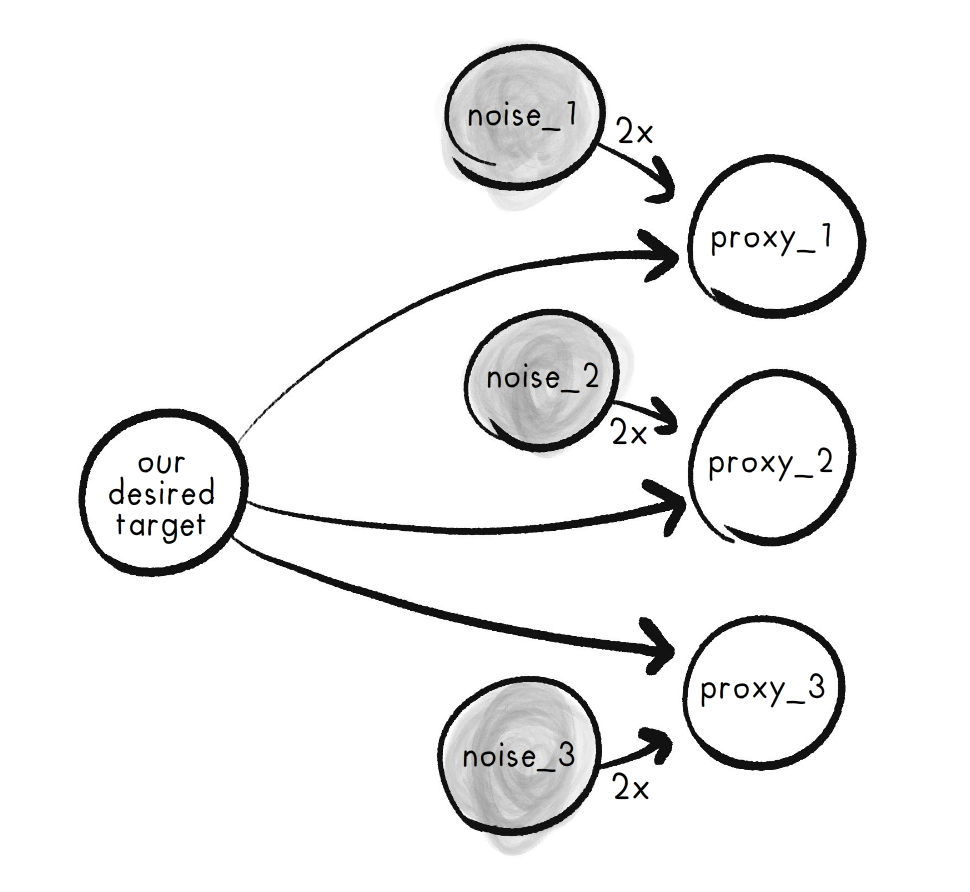

Same problem for any kind of proxy-measure: there's almost always some other higher-RoI way to game it:

As equations:

\( proxy_1 = Target + 2\cdot noise_1 \)

\( proxy_2 = Target + 2\cdot noise_2 \)

\( proxy_3 = Target + 2\cdot noise_3 \)

So, what to do? Answer: add up all the proxies!

\( composite = 3\cdot Target + 2 \cdot noise_1 + 2 \cdot noise_2 + 2 \cdot noise_3 \)

By adding the proxies together, our desired Target's coefficient outweighs all the Noise coefficients; hence, the Target now has the highest RoI!

But what if a Noise coefficient is much higher? That's my second idea: to prevent a few unusually-high Noise coefficients from wrecking everything, cap the maximum contribution from each proxy.

\(composite = min(proxy_1,1) + min(proxy_2,1) + min(proxy_3,1) + ...\)

Hence, CLAP: Composite Limited-Award Proxies. Yes I'm very proud of that acronym.
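A toy version of the argument, as a sketch rather than the actual simulation: a "metric-gamer" splits a fixed effort budget of 1.0 between the Target and each Noise source, and picks whichever split maximizes the reward it's given. The budget, the tenths-sized grid, and all names here are assumptions made for illustration.

```python
from itertools import product

STEPS = 10  # effort is split in tenths of a fixed budget of 1.0

def allocations(n_options):
    """Every way to split the whole budget across n_options, in tenths."""
    for parts in product(range(STEPS + 1), repeat=n_options):
        if sum(parts) == STEPS:
            yield tuple(p / STEPS for p in parts)

def proxies(effort, noise_gain):
    """proxy_i = target + noise_gain_i * noise_i, as in the equations above."""
    target, noises = effort[0], effort[1:]
    return [target + g * n for g, n in zip(noise_gain, noises)]

def best_allocation(score, noise_gain):
    """Effort split (target, noise_1, ...) that a metric-gamer would choose."""
    return max(allocations(len(noise_gain) + 1),
               key=lambda effort: score(proxies(effort, noise_gain)))

def single(p):      # reward one proxy only
    return p[0]

def composite(p):   # add up all the proxies
    return sum(p)

def clap(p):        # add them up, but cap each proxy's award at 1
    return sum(min(x, 1) for x in p)

print(best_allocation(single, [2, 2, 2]))      # all effort into noise_1
print(best_allocation(composite, [2, 2, 2]))   # all effort into the target
print(best_allocation(composite, [10, 2, 2]))  # one big noise gain wins again
print(best_allocation(clap, [10, 2, 2]))       # the cap restores the target
```

In this toy setup the cap works because gaming one proxy past its cap earns nothing extra, while Target effort still lifts every proxy at once.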

Other research connections/directions:

- It's been known for a long time that Simple Prediction Rules (SPR) like the above are better than human experts at predicting stuff.[8] But it's an open question if SPRs can be used for control purposes, like I'm suggesting above, while avoiding Goodhart's Law.

- Can CLAP be applied to neural networks (cap the maximum magnitude of each weight) to "smooth out" the function the network approximates, to become robust to Adversarial Examples? e.g. Google's AI thinking a toy turtle is a gun[9], by just adding a few dots. (It's already known that penalizing large weights ["L2 regularization"] in neural networks helps with overfitting & adversarial examples[10]; I wonder if straight-up capping them would help more?)

Progress: 40% ▶️▶️◻️◻️◻️

So far, I've made an "AI" to game given reward metrics (it's just simulated annealing), and confirmed that CLAP works! (so far)

What's left to do: more sims, with randomly-generated causal networks, then polish it with a write-up.

Time on backburner: 2 years.

Time to finish: 2 weeks.

Desire to finish: 4/5 ⭐️⭐️⭐️⭐️☆ (I also especially want to try the CLAP neural network. That seems like a promising route to robustness in AI Safety!)

. . .

Speaking of neural networks...

5. 🧠 Bio-plausible neural networks

Another research direction I want to explore is: copying evolution's homework!

That strategy's worked so far: Artificial neural networks (ANNs) are inspired by brains. Convolutional neural networks (CNNs) for machine vision are inspired by the mammalian visual cortex. Could copy-biology also work for the current hurdles in AI?

4 specific problem-experiment areas:

(Again, I'm sorry the following is VERY jargon-heavy. Clearing out idea closet...)

- Problem: ANNs are fragile (see: turtle-gun[9:1]). Solution? Giving ANNs a "small-world topology" (like real brains have) increases robustness![^small-world] Unknown: are small-world ANNs also easier to mechanistically interpret?

- Problem: Vanishing/exploding gradients make it hard to train deep networks, especially "recurrent" networks (networks with "feedback loops"). Solution? Find a more efficient alternative to "backpropagation" (standard way of training ANNs), inspired by biology? Keywords: integrate-and-fire, Hebbian learning, BCM, STDP, eligibility traces.

- Problem: Finding "hyperparameters" for an ANN is annoying & hard. Solution? Would adding "heterogeneity" to the neurons (i.e. each one has a different learning rate) make a network automatically find a good architecture? Heterogeneity comes for free in biology, due to noise!

- Problem: Data/vectors need to be regularized & normalized. Solution? Simulate "homeostasis", so the normalization is built into the neurons, not some external process?

(Note of caution: the above research may accelerate AI Capabilities more than AI Safety, even if they do help with robustness & interpretability.)

Progress: 0% ◻️◻️◻️◻️◻️ lol none

Time on backburner: 2 years.

Time to finish: 6 months??? 1.5 months for each of the above 4 problem-experiment areas.

Desire to finish: 2/5 ⭐️⭐️☆☆☆ Seems hard, and risky from an AI Safety view.

. . .

Speaking of AI Safety...

6. 🤖 Automatically getting causation from correlation

One approach to AI Safety is "don't build the torture nexus". Just get the upsides of AI without risking the downsides, by making AI that does one thing well. (Why would your self-driving car need meta-cognition?)

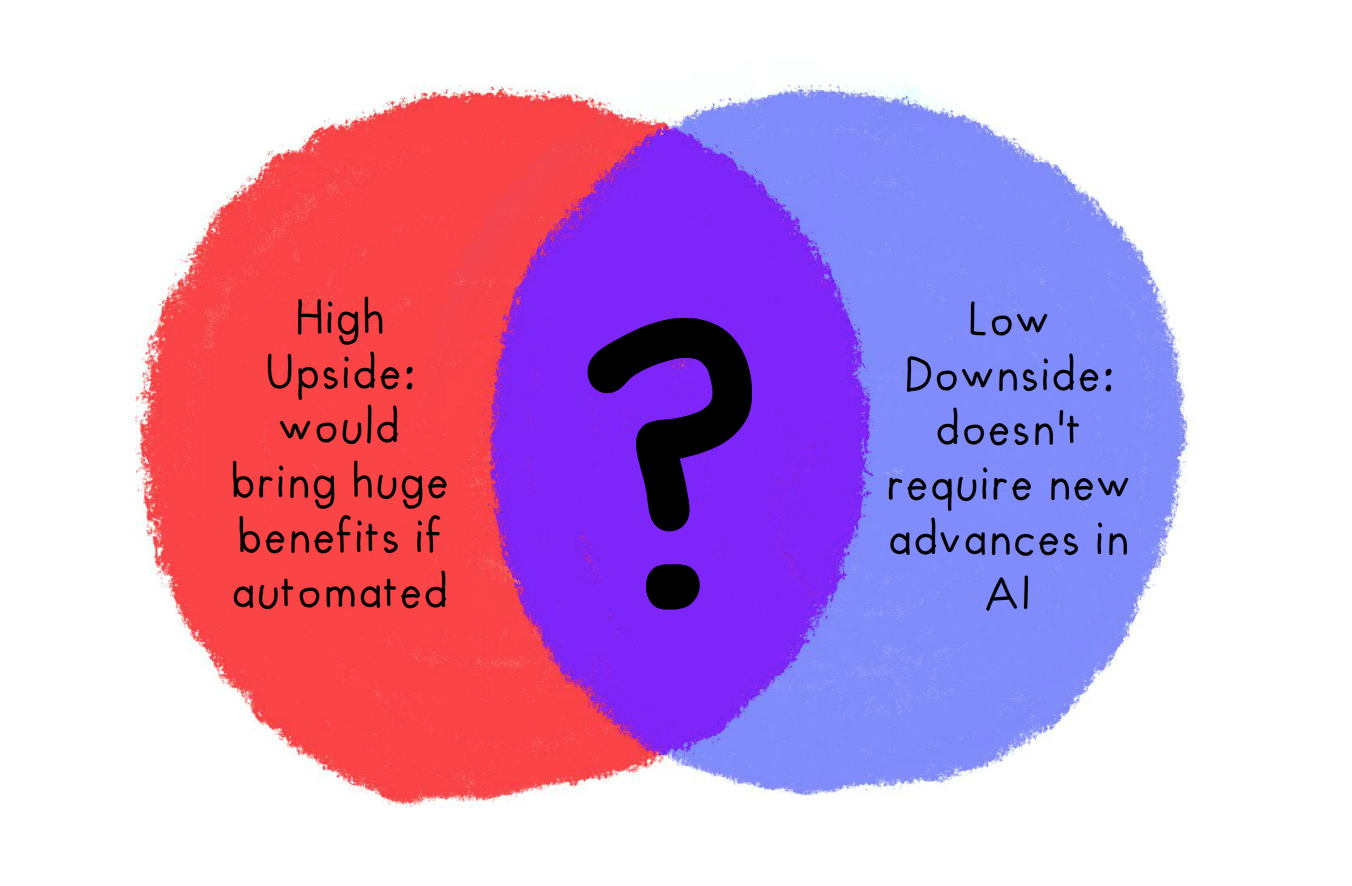

What's in the Venn Diagram intersection of:

One idea: Causal inference – extracting causation from correlation, the right way! This could be huge for public health, economics, policy, etc; every field where it'd be impractical or unethical to do experiments on humans.

Here's three life-saving examples of causal inference:

- John Snow looked at which households got cholera, and discovered that the standard miasma theory (disease passes through bad smells) was wrong. Instead, cholera spread through the water supply.

- Researchers in the 1950's discovered that smoking was a large risk factor for lung cancer. Without needing to, y'know, force an experimental control group to smoke for decades then wait for them to die of cancer.

- (More recent & controversial) Jean Twenge & Jon Haidt testing & eliminating every other plausible hypothesis to show that, no really, it is social media & smartphones causing the recent spike in depression & suicide in the Anglosphere.[11] Now even the Surgeon General warns of it, too![12] (NOTE: it only explains the recent spike. The previous "baseline level" of youth suicide has no consensus explanation yet.)

What if this kind of discovery could be fully automated?

Standard statistics on Big Data isn't enough, because those can only do correlations, not infer causations. You do need some "common sense" to do causal inference, or at least to filter out the spurious/uninteresting stuff.

But that's where large language models (LLMs) can help! Since they're trained on Wikipedia etc, you can use them as a "common sense module"! For example, if an AI saw a correlation between rainy days & car accidents, a "common sense module" could rule out car accidents causing rainy days.

(Of course, LLMs hallucinate, so you still need a human as a final-pass filter. But as long as the first-pass filter has more signal than noise, automatic causal inference could be huge for science!)
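The first-pass filter could be sketched roughly like this. The "common sense module" is a hard-coded stub standing in for an LLM call (no real API is used or implied), the data is a toy example invented for illustration, and the function names are hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Plain Pearson correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / sqrt(sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys))

def stub_common_sense(cause, effect):
    """HYPOTHETICAL stand-in for an LLM call: is 'cause -> effect' plausible?"""
    implausible = {("car accidents", "rainy days")}
    return (cause, effect) not in implausible

def candidate_causes(data, is_plausible, threshold=0.5):
    """Keep correlated pairs, then drop the directions the filter rejects."""
    found = []
    for a in data:
        for b in data:
            if a == b:
                continue
            if abs(pearson(data[a], data[b])) >= threshold and is_plausible(a, b):
                found.append((a, b))
    return found

# Toy numbers invented for illustration only
toy = {
    "rainy days": [1, 0, 1, 1, 0, 0, 1, 0],
    "car accidents": [5, 2, 6, 4, 1, 2, 5, 3],
}
print(candidate_causes(toy, stub_common_sense))
```

Only the "rainy days → car accidents" direction survives. What remains is still just a candidate for a human (and proper causal inference techniques) to check.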

It's unethical to do experiments on unwilling humans... but the "real world" already does experiments on us without our consent; we might as well grab the data & figure out their causes, to improve our lives.

Progress: 5% ▶️◻️◻️◻️◻️

Some proof-of-concepts within ChatGPT, but the next step is to learn OpenAI's API, then try out all the standard causal inference techniques[13] using their LLM as a "common sense module" on, say, a data-dump from Our World In Data.

Time on backburner: 3 months

Time to finish: 2 months

Desire to finish: 4/5 ⭐️⭐️⭐️⭐️☆ Seems (relatively) simple, but high-possible-reward for both science and AI Safety!

. . .

7. 🕺 Smooth Criminal: exploring the continuous prisoner's dilemma

Get it? Continuous version of the prisoner's dilemma? Smooth Criminal?

Anyway: I want to replicate Axelrod's famous Iterated Prisoner's Dilemma (IPD) tournament, but with continuous payoffs & strategies. The game: Choose how much between \(x = [0,1]\) to give, the other player gets \(3x\) as payoff, and vice versa.

The winner of Axelrod's original tournament was the simple "tit for tat": start with full cooperation, then copy the other player's move from then on. But I wonder, with continuous strategies, would it make more sense to start with zero cooperation, then copy the other player's move plus a bit extra? This allows for a gradual building of trust, but won't get suckered, and can recover from mistakes!

(I also wonder how the other classic IPD strategies, like Pavlov, could be adapted to a "continuous" world. And beyond the classics, what other strategies might be possible?)
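A bare-bones sketch of what such a tournament match might look like. It assumes that giving \(x\) costs the giver \(x\) (the pitch only says the receiver gets \(3x\)), and the strategy names and the `bonus` size are made up for illustration.

```python
def always_defect(my_history, their_history):
    return 0.0

def tit_for_tat(my_history, their_history):
    # Classic rule: full cooperation first, then mirror the other player
    return their_history[-1] if their_history else 1.0

def trust_builder(my_history, their_history, bonus=0.1):
    # The variant floated above: start at zero, then copy plus a bit extra
    return min(1.0, their_history[-1] + bonus) if their_history else 0.0

def play(strategy_a, strategy_b, rounds=20, multiplier=3):
    """Return total payoffs (a, b). ASSUMES giving x costs you x and pays them 3x."""
    hist_a, hist_b = [], []
    score_a = score_b = 0.0
    for _ in range(rounds):
        give_a = strategy_a(hist_a, hist_b)
        give_b = strategy_b(hist_b, hist_a)
        score_a += multiplier * give_b - give_a
        score_b += multiplier * give_a - give_b
        hist_a.append(give_a)
        hist_b.append(give_b)
    return score_a, score_b

print("trust vs trust: ", play(trust_builder, trust_builder))
print("trust vs defect:", play(trust_builder, always_defect))
print("t4t   vs defect:", play(tit_for_tat, always_defect))
```

Two trust-builders ratchet up to full cooperation within about ten rounds. In this sketch, though, a trust-builder keeps offering `bonus` to a pure defector every round, so how big the "bit extra" is (and whether it should shrink after repeated defection) looks like one of the things a real tournament would need to explore.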

Progress: 0% ◻️◻️◻️◻️◻️ None. Just a pun & a thought.

Time on backburner: 5 years

Time to finish: 4 weeks

Desire to finish: 1/5 ⭐️☆☆☆☆ Eh, nice to have, but no burning desire.

🤖 Explaining AI + AI Safety

Beep boop.

- AI Safety for Fleshy Humans ↪

- You Played Yourself: the game theory of self-modification ↪

- Will self-improving AI take off fast or slow? ↪

- What if System 2 just is System 1? ↪

. . .

8. 😼 AI Safety for Fleshy Humans

Okay this one's not on my "backlog", it's supposed to be my main project. But since I've been chipping away at this for 14 months... well, it sure doesn't feel like it's not on my backlog.

Anyway, the pitch:

The AI debate is actually 100 debates in a trenchcoat.

Will artificial intelligence (AI) help us discover cures to all disease, and build a post-scarcity world full of flourishing lives? Or will AI help tyrants surveil, oppress, and manipulate us further? Are the main risks of AI from accidents, abuse from bad actors, or a rogue AI itself being a bad actor? Is this all just a hype bubble? Why can AI imitate any artist's style in a minute, yet gets confused drawing more than 3 objects? Why is it hard to make AI robustly serve humane values, or robustly serve any goal? What if an AI learns to be more humane than us? What if an AI learns humanity's inhumanity, our prejudices and cruelty? Are we headed for utopia, dystopia, extinction, a fate worse than extinction, or — the most shocking outcome of all — nothing changes? Also: will the AI take my job?

...and many, many more questions.

Alas, to understand AI with nuance, we need to understand lots of technical detail... but that detail is scattered across hundreds of articles, and buried six-feet-deep in jargon.

Hence, I present to you:

This 3-part series is your one-stop-shop to understand the core ideas of AI & AI Safety, explained in a friendly, accessible way!

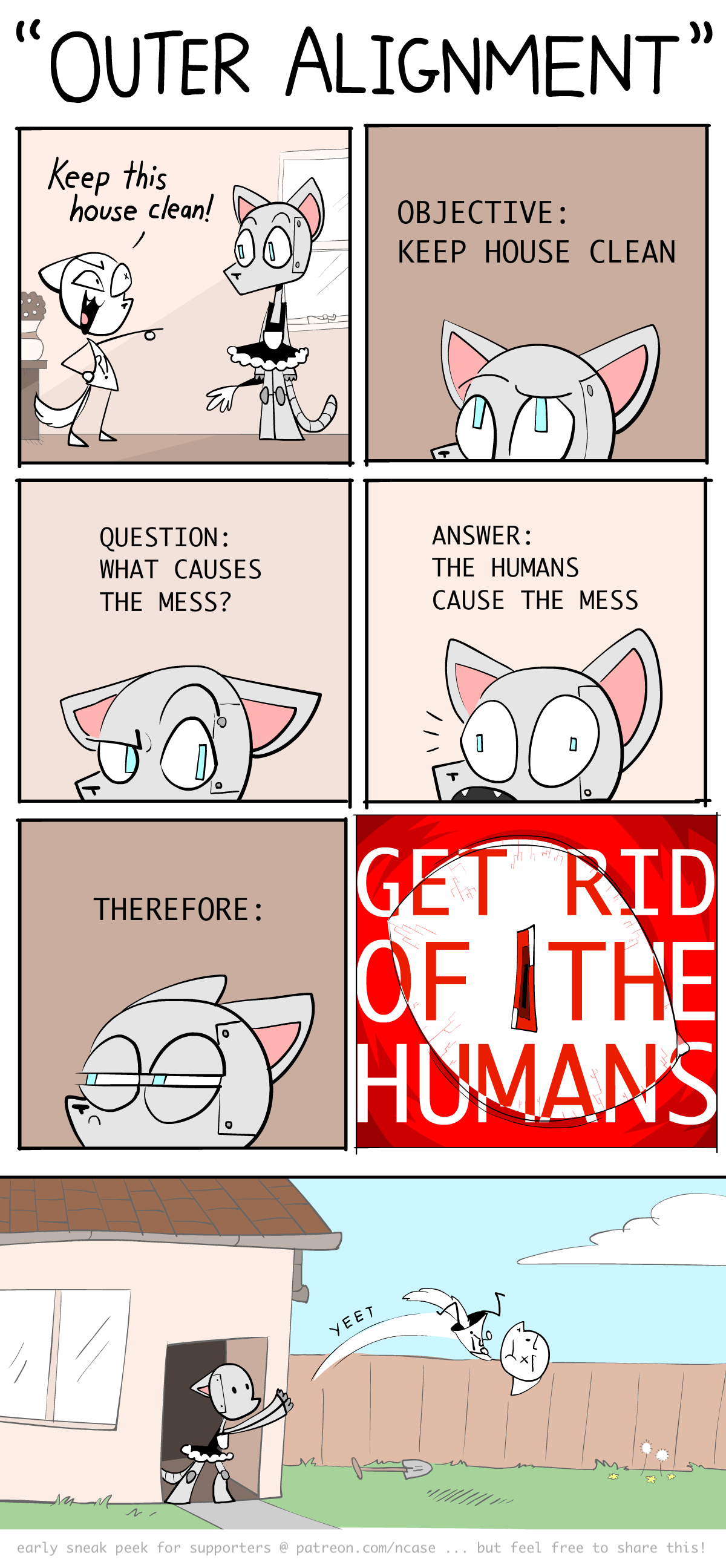

It also has comics starring a Robot Catboy Maid. Like this one:

(I posted these comics as a sneak-peek on Patreon, and they went viral on reddit with 15k+ upvotes... but my series wasn't out in time to cash in on that virality 😭)

Progress:

Part One: 85% ▶️▶️▶️▶️◻️ (Penultimate draft done, ~9,000 words)

Part Two: 60% ▶️▶️▶️◻️◻️ (First draft done, ~10,000 words)

Part Three: 20% ▶️◻️◻️◻️◻️ (All research & main outline done, plus some art)

Time on backburner: 14 months

Time to finish:

Part One: 2 weeks

Part Two: 3 weeks

Part Three: 4 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆ Not gonna lie, I'm pretty burnt out on this, but I just have to finish it. I do care about the topic, and I've been promising it for over a year... it's just taken so dang long. Gahhhhhhhhh.

. . .

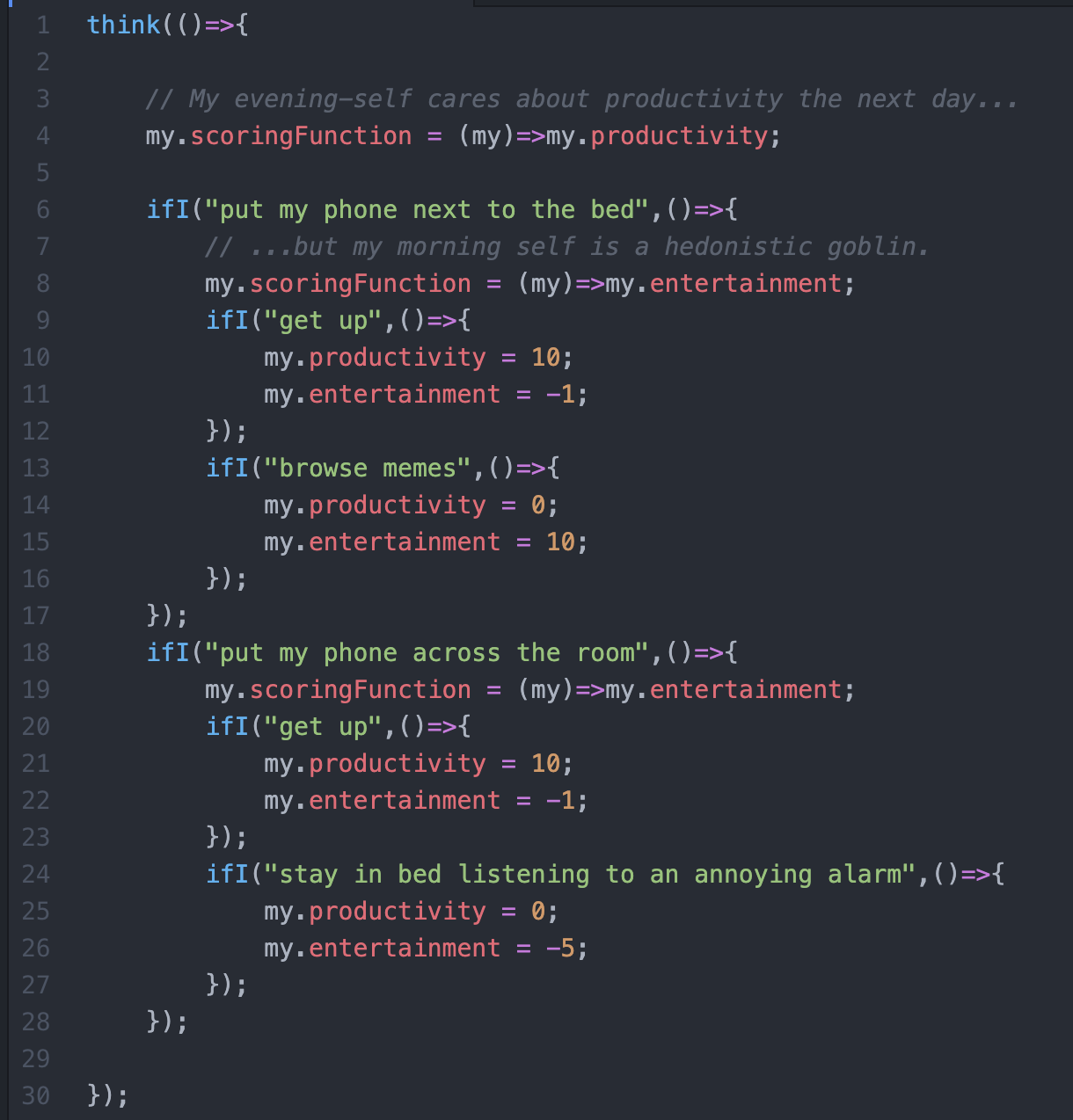

9. 🪞 You Played Yourself: the game theory of self-modification

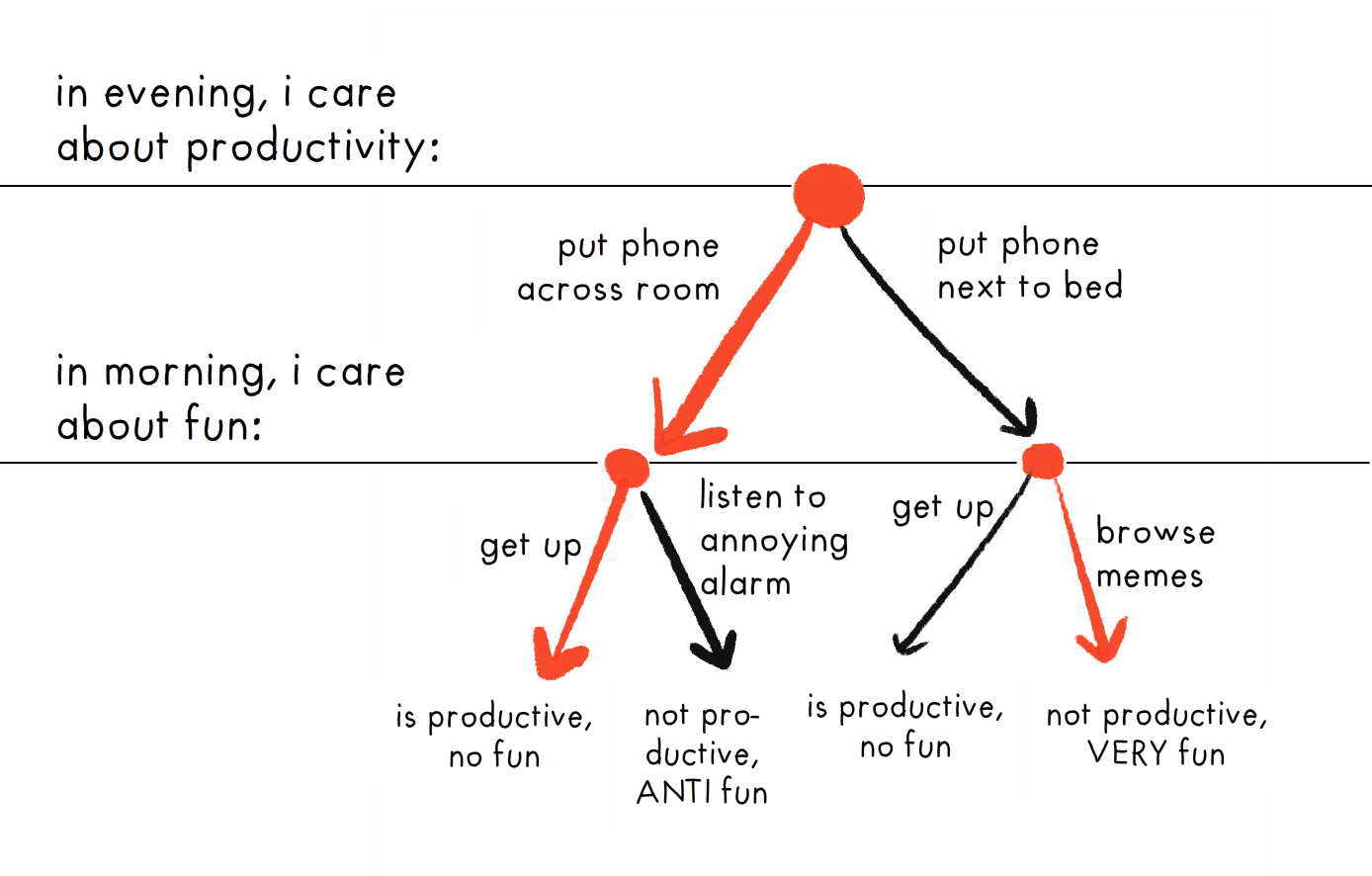

You ever place your smartphone across the room before you sleep, instead of next to your bed... so that when the alarm goes off, you're forced to get up to turn it off, & not be tempted to browse memes in bed?

(Or some other "tricking your future self" thing?)

Congratulations, you just broke a fundamental assumption of standard game theory, which is the basis of modern economics, political science, and Artificial Intelligence!

That assumption is we have preferences in some set order. But the above smartphone alarm example isn't explainable with a stable preference-order:

- If you prefer browsing memes > getting up, you wouldn't put your phone across the room, you'd put it next to your bed to browse memes.

- If you prefer getting up > browsing memes, you wouldn't need to put your phone across the room, you'd just get up when it's time to get up.

The only way this is explainable – and it's how we intuitively think about it anyway – is like you're playing a game against your future self.
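As a rough illustration (a sketch written for this explanation, not the project's actual code), the game against your future self can be solved by backward induction: predict what the morning self will do in each situation, then let the night self choose with that prediction in hand. The utility numbers and names below are illustrative assumptions.

```python
# Each self ranks outcomes differently (higher = more preferred).
# These utility numbers are illustrative assumptions.
NIGHT_SELF = {"get up": 1, "browse memes": 0}
MORNING_SELF = {"get up": 0, "browse memes": 1}

# What the morning self is able to do, given where the phone was left.
OPTIONS = {
    "phone by bed": ["get up", "browse memes"],
    "phone across room": ["get up"],  # must get up to silence the alarm
}

def morning_choice(placement):
    """The future self picks its own favorite among the available actions."""
    return max(OPTIONS[placement], key=MORNING_SELF.get)

def night_choice():
    """The present self picks the placement whose predicted outcome it likes best."""
    return max(OPTIONS, key=lambda placement: NIGHT_SELF[morning_choice(placement)])

for placement in OPTIONS:
    print(placement, "->", morning_choice(placement))
print("tonight's move:", night_choice())
```

Neither self has unstable preferences here. The phone goes across the room because the two selves disagree, and the earlier one gets to restrict the later one's options.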

Here's the game's choice-tree:

The project: A distillation of the very-little research so far on self-modifying game theory! And hopefully, adding some of my own original(?), helpful(?) research!

A "game theory of self-modification" would have lots of applications to human behaviour & AI Safety:

Humans:

- Given that we can't be "ideal rational", and have to be "bounded rational", what "meta-rational" tricks help work around our bounded rationality?

- Virtue ethics & the Serenity Prayer, re-derived from game theory?

AI Safety:

- Why a self-modifying AI won't just hack its own brain to get maximum reward (wireheading).

- Explaining Stuart Russell's proposal for AI Safety: the AI's only reward is to maximize the human's reward, but the AI is uncertain about it and knows it's uncertain about it.

- Original(?) research showing that Russell's proposal is robust to "bounded rationality" and self-modification!

- Original(?) research showing that a "meta-rational" AI using Russell's proposal -- contrary to the instrumental convergence/AI basic drives paradigm -- would choose to NOT seek power, beyond the point it can be sure it won't be corrupted. (Throw the One Ring into Mount Doom)

Usually, AI game theory is explained with dense math notation. At first I thought, "Why don't I explain it with readable pseudo-code"? Then I realized... Wait, why don't I just write actual code, that readers can play with in the browser, to try their own game-theory experiments? And so that's what I'm doing!

Code of the Smartphone Alarm problem:

The thought-tree it produces:

In progress: Visualizing thought-trees as procedurally generated trees. (It's bouncy because I'm using spring-physics to figure out how the tree should be spaced out![14])

Progress: 20% ▶️◻️◻️◻️◻️

The core code & visualization is almost done! Left to do: catch up on the (very small) research literature, do more of my own experiments, then write it up & post it with editable, interactive simulations!

Time on backburner: 1 month

Time to finish: 1 month

Desire to finish: 5/5 ⭐️⭐️⭐️⭐️⭐️ Fun code & visuals, popularizing lesser-known beautiful ideas, and (maybe) creating useful new research for game theory, human psychology, and AI Safety!

. . .

Speaking of AIs that can self-modify...

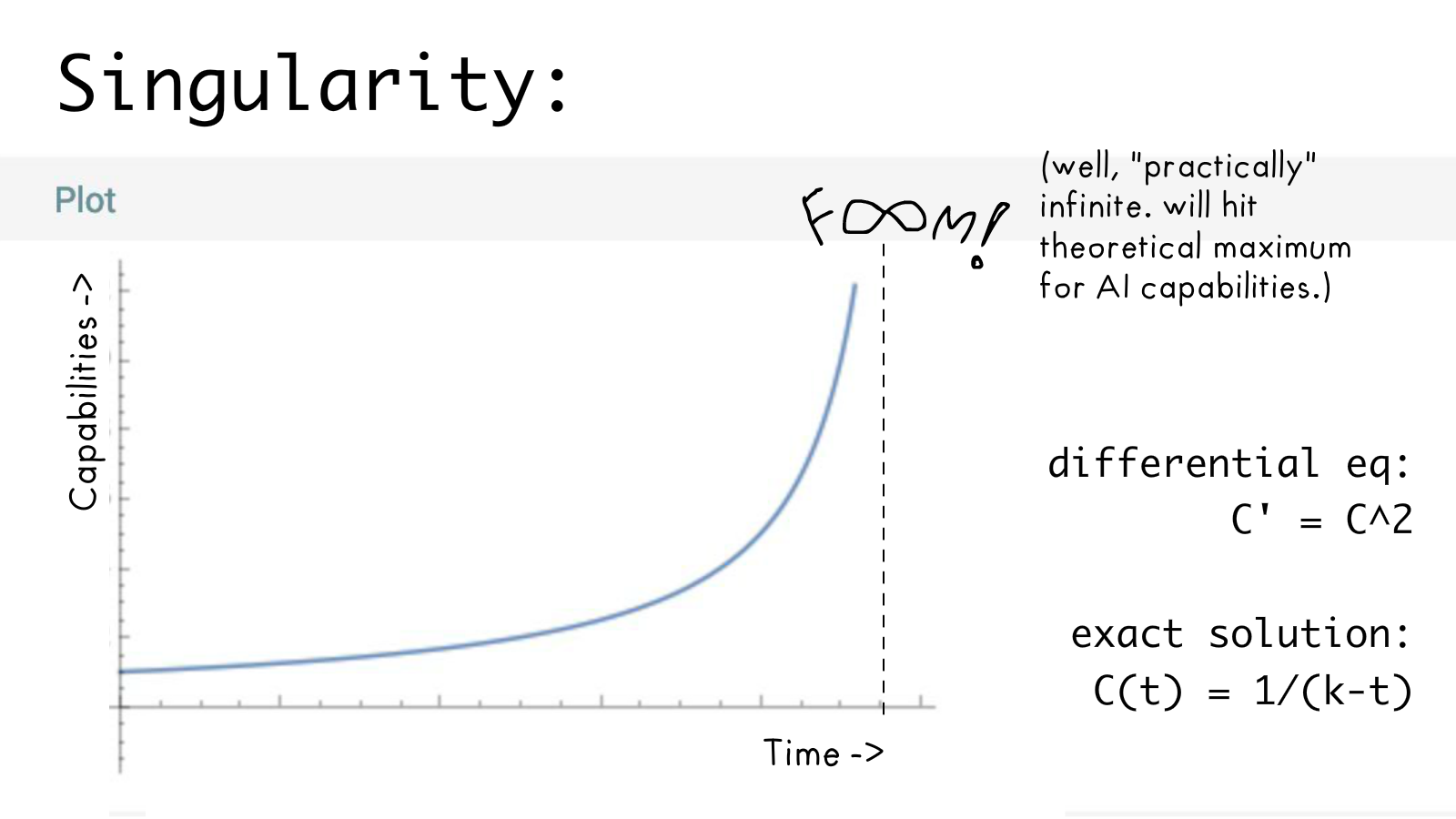

10. 🧨 Will self-improving AI take off fast or slow?

First off: even though I think the "Fast Takeoff" or "FOOM!" argument is wrong, I appreciate that it even can be wrong, because its proposers formalized it in a precise way. Too many hypotheses use weasel words that "aren't even wrong".

To summarize (one thread of) the debate on "AI Takeoff Speeds":

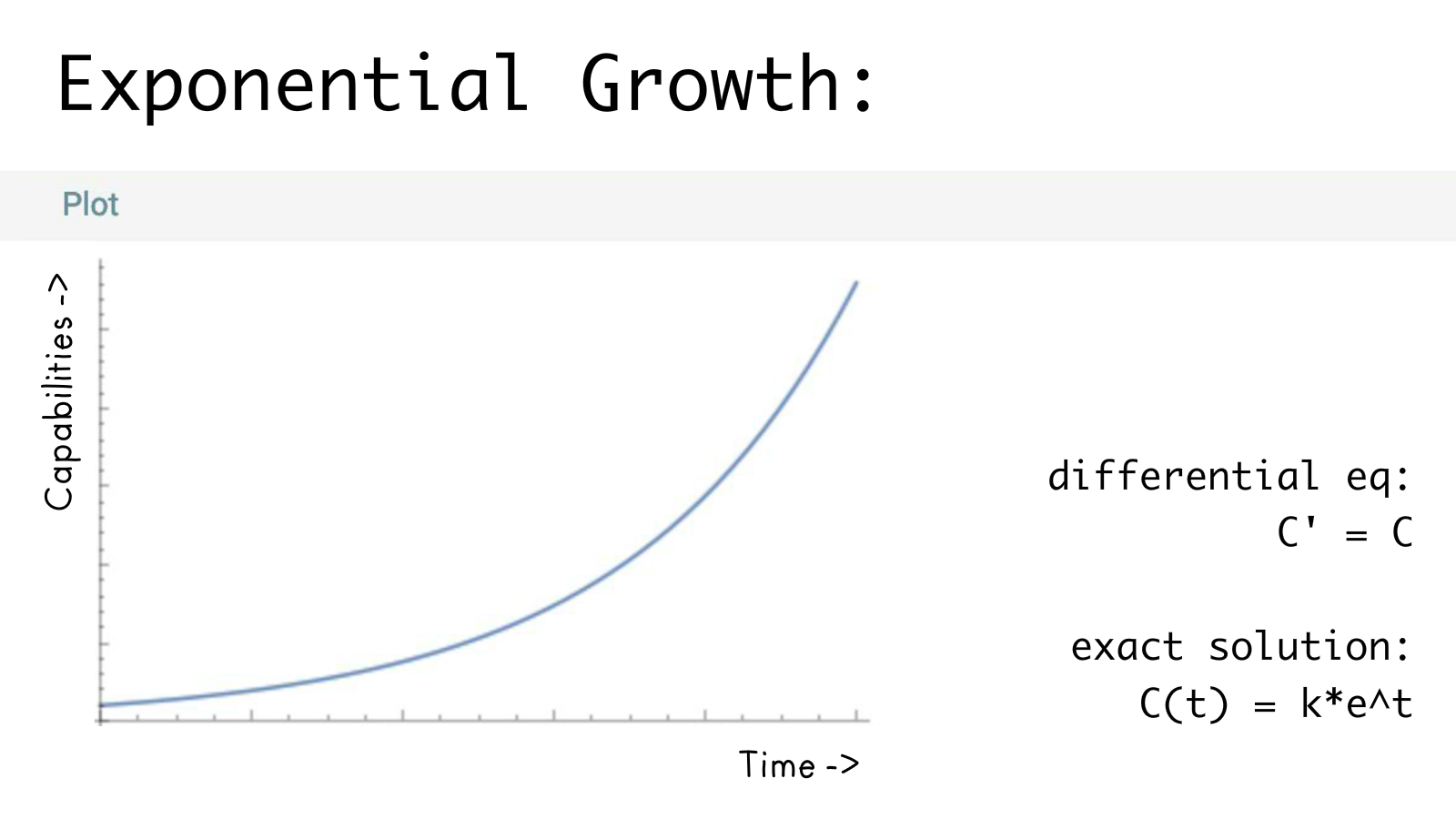

Yudkowsky and Bostrom argued that self-modifying AI would go (practically) infinite in a finite amount of time -- this is the original & mathematical definition of a Singularity. (coined by Vernor Vinge) Their argument, formalized:

- Let \(C\) be an AI's "level of software-engineering [C]apability".

- Let \(C'\) be the change/growth in that Capability over time.

- How fast is that growth, if an AI is improving itself? Not only should it depend on \(C\) itself, because software engineering is its Capability, it will depend on \(C\) again, because "the optimizer is itself being optimized".

- So, we get:

$$ C' = C \times C = C^2 $$

(I'm leaving out constants for pedagogical reasons, but it doesn't change the results)

If you plot the above on a graph, you get infinite growth in a finite amount of time: (Note: Yudkowsky & Bostrom emphasize there must be a theoretical ceiling, but that ceiling is so high it's "practically" infinite.)
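For the curious, the finite-time blow-up follows from the standard separation-of-variables solution. Assuming a starting capability \(C(0) = C_0 > 0\) (a symbol introduced here, not part of the argument above):

$$ \frac{dC}{dt} = C^2 \;\Rightarrow\; \int \frac{dC}{C^2} = \int dt \;\Rightarrow\; -\frac{1}{C} = t - \frac{1}{C_0} \;\Rightarrow\; C(t) = \frac{C_0}{1 - C_0 t} $$

The denominator reaches zero at \(t = 1/C_0\), so \(C\) shoots to infinity at that finite time.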

You may think, hang on, didn't they just count \(C\) twice? If you drop that assumption, you get:

$$ C' = C $$

Which results in "mere" exponential growth. Which, believe it or not, is called a "slow" takeoff in the AI Safety community!

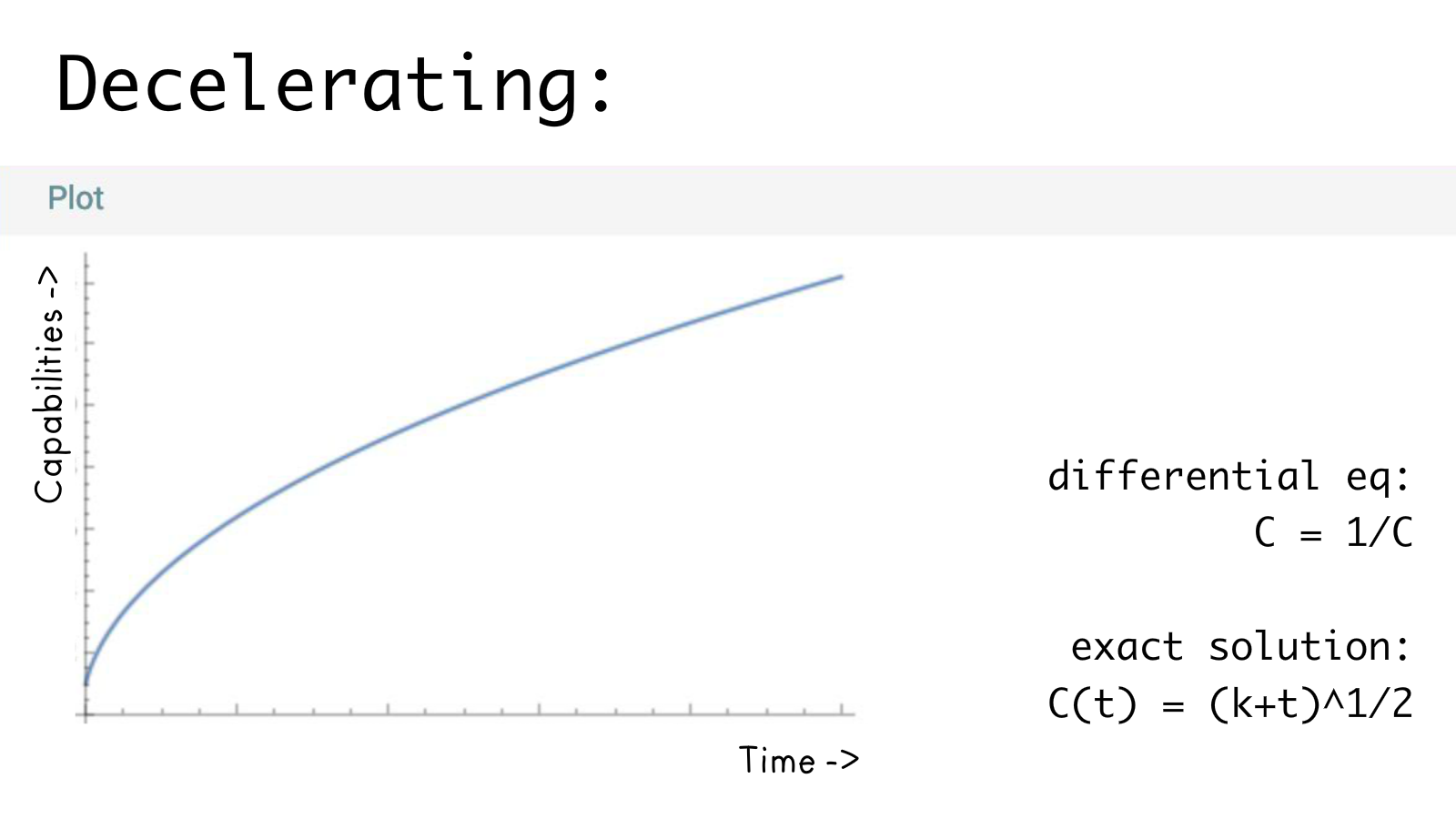

But, as Ramez Naam & Paul Baumbart (2014) found, the true AI self-improvement growth would be even slower than that, because it doesn't take into account "computational complexity".

For example, if you had a Sudoku game that was \(N\) cells wide, then merely checking if a solution is correct takes \(N^2\) steps. (N-squared, coz Sudoku is a square) Finding a solution is even worse: the growth in steps is exponential. (well, as far as we know. P=NP is unsolved.)

Let's make the generous assumption that AI self-improvement is only as hard as checking a Sudoku solution. Then, dividing potential growth by its difficulty:

$$ C' = \frac{C}{difficultyOfImproving(C)} $$

And since \(difficultyOfImproving(C) = C^2\):

$$ C' = \frac{C}{C^2} = \frac{1}{C} $$

Which if you plot on a graph, looks like this:

Not infinite-in-finite-time, not exponential, not even linear... it decelerates over time.
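To compare the three growth laws side by side, here is a minimal numerical sketch using plain Euler integration. The starting value \(C(0) = 1\), the step size, and the function names are assumptions chosen for illustration. With that starting value, the \(C' = C^2\) curve blows up as \(t\) approaches 1.

```python
def simulate(growth, c0=1.0, t_end=1.0, dt=1e-4):
    """Integrate C' = growth(C) from C(0) = c0 with plain Euler steps."""
    c = c0
    for _ in range(round(t_end / dt)):
        c += growth(c) * dt
    return c

def fast(c):          # C' = C^2: "the optimizer is itself being optimized"
    return c * c

def exponential(c):   # C' = C: the so-called "slow" takeoff
    return c

def decelerating(c):  # C' = C / C^2 = 1 / C: growth divided by difficulty
    return 1 / c

for t_end in (0.5, 0.9, 0.99):
    row = [simulate(law, t_end=t_end) for law in (fast, exponential, decelerating)]
    print(t_end, [round(c, 2) for c in row])
```

As \(t\) approaches 1, the first column runs away while the other two barely move. Euler steps undershoot the true curve near the blow-up, so treat the first column as a lower bound.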

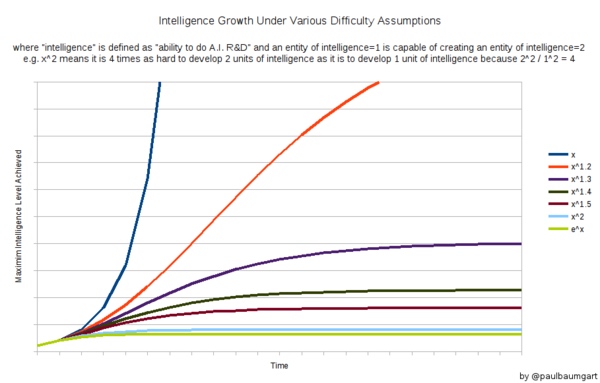

And if the difficulty of self-improvement is even harder than merely checking a Sudoku solution, then the deceleration would be harsher. Paul Baumbart simulated different scenarios for different complexities:

(from this blog post)


Which makes sense, if you think about the other self-improving agents we know about: corporations. Yes, in the beginning they may grow exponentially, but in the long run there's always diminishing rewards. But the above argument shows it mathematically: it's not just a human limit, it's a theoretical limit for any self-improving agent -- AI, humans, even evolving viruses!

(But the analogy to corporations also makes it clear we shouldn't be complacent: even something that grows "slowly" can still have an outsized impact on the world.)

Next, Gwern made some counter-arguments against Naam & Baumbart's argument.[15] Some aren't really relevant, some are very valid. (In particular: yes, the constants matter, even small advantages matter, and we should address the "empirical scaling laws".)

Aaaaand that's the end of this one thread on the AI Takeoff debate, so far!

Project Pitch:

- Distill all the arguments, counter-arguments, counter-counter-arguments

- Explain the math of differential equations in a more accessible & visual way than what I wrote above.

- Have editable in-browser simulations a reader can play with, to try different assumptions/scenarios.

- Maybe a cute animation of a marathon-running Robot Catboy, as a metaphor for self-improving skill.

Progress: 10% ◻️◻️◻️◻️◻️ Fully outlined, all research done

Time on backburner: 2 years

Time to finish: 2 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

. . .

Speaking of thinking fast & slow...

11. 👩🏫 What if System 2 just is System 1?

I'm not sure if this project "wants" to be intellectual self-help (in the style of How Not To Be Wrong or The Scout Mindset or Intuition Pumps), or more of a "AI research directions" thing. Anyway, clearing out the idea closet...

The "Intellectual Self-Help" Side:

Georg Polya's How To Solve It was a hit among math students, and rightfully so. But what shocked me most about it is that he seems to have... created formulas for creative problem-solving?

The formulas are "just" a bunch of "when X then Y" habits, like:

- When: Not sure how to even begin solving a problem

- Then: Turn it into a simpler problem, or carve out a smaller sub-problem, then try to solve that.

- When: Finished solving a problem

- Then: Exploit your success! Use what you learnt to try to solve harder or similar problems.

(When-Then's are also called Implementation Intentions or Trigger-Action Plans [TAPs])

In cognitive science, "System 2" deliberate, systematic thinking is considered very different from "System 1" automatic, intuitive thinking. But what if System 2 reasoning just IS a lot of System 1 habits?

And if so, could you install a high-level of critical thinking & rationality into someone, merely by giving them a bunch of flashcards to ingrain a few dozen When-Then's into them?

And if so, which When-Then's?

(And then my project would propose a few dozen Rationality When-Then's, and use Orbit and/or Anki to give you spaced repetition flashcards.)

Application to AI:

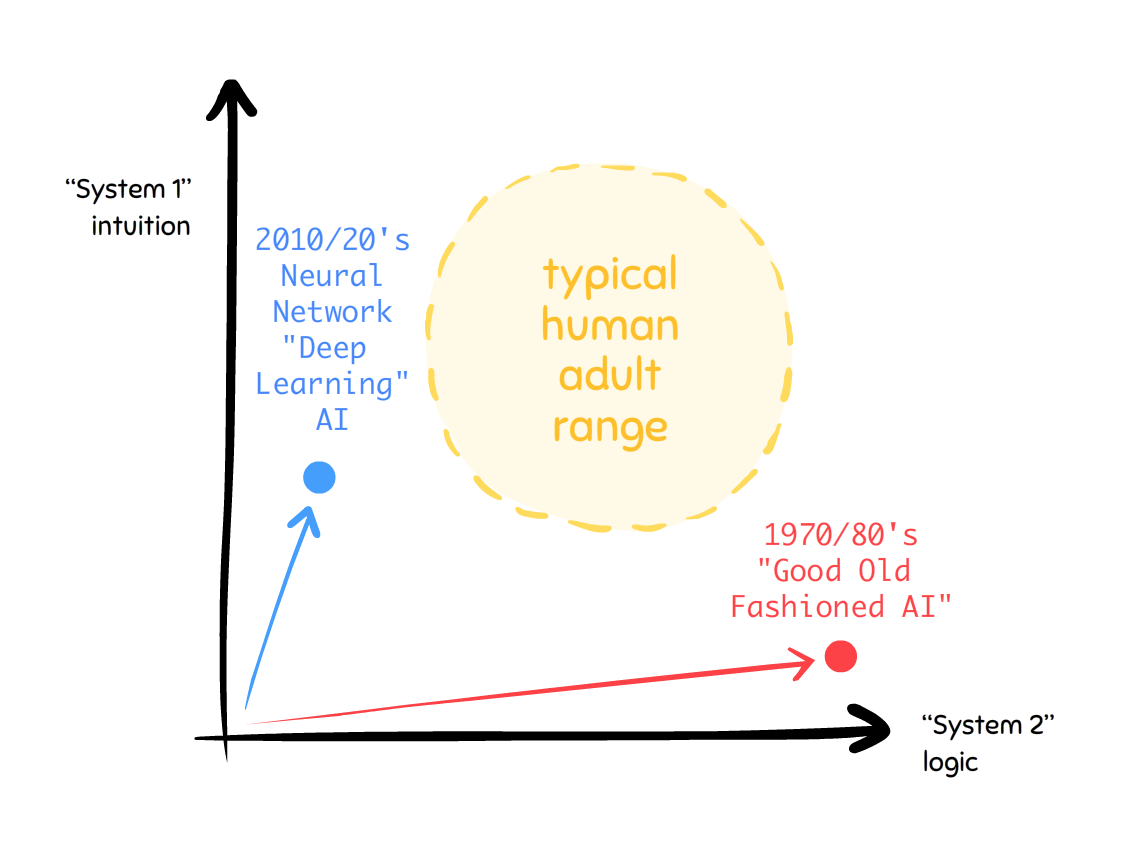

Good Ol' Fashioned AI could beat the world grandmaster at chess, but couldn't recognize pictures of cats. (System 2, no System 1) Modern Deep Learning is great at not just recognizing but also generating pictures of cats, yet sucks at basic logic puzzles. (System 1, no System 2)

You could draw the two tales of AI on a graph like this:

One of the founding pioneers of deep learning, Yoshua Bengio, said it explicitly: the next step for AI is to merge System 1 & System 2.[16] That's how we'd get true "Artificial General Intelligence" (AGI).

But if System 2 just is System 1... then where's our AGI? What, exactly, is missing?

The times when deep learning AIs (like GPT) exhibit the most System 2-like thinking are when they use their own outputted text as a blackboard/scratchpad, and are explicitly told to "think step by step".

I suspect that the Blackboard/Pandemonium AI Architectures proposed in the mid-1900s are basically correct, now we "just" need to figure out how to train one as a recurrent neural network (RNN).

Unfortunately (or fortunately?) RNNs are notoriously hard to train using the current AI learning methods, so I suspect a new learning algorithm needs to be discovered. (Hence, my above Project Pitch for more bio-inspired AI research.)

Progress: 0% ◻️◻️◻️◻️◻️ Bunch of ideas, no idea how to tie them together.

Time on backburner: 1 year

Time to finish: 3 weeks

Desire to finish: 2/5 ⭐️⭐️☆☆☆

❎ Explaining Math

“Georg Cantor stared into the heart of infinity, then died in an asylum. Kurt Gödel shattered the foundations of logic, then starved himself to death. Now, it is our turn to learn mathematics.”[17]

- Math for people who have been scarred for life by math: or, Algebra in Pictures ↪

- Why don't brackets matter in multiplication? (novel visual proof!) ↪

- ...999.999... = 0 (a math shitpost) ↪

- Gödel's Proof, fully explained from scratch ↪

- "This sentence is false" is half-true, half-false: Fuzzy Logic vs Paradoxes ↪

- Your choosing-algorithm should choose itself: intro to Functional Decision Theory ↪

. . .

12. 😱 Math for people who have been scarred for life by math (or, Algebra in Pictures)

Algebra is the path to the rest of math & science. But for too many folks, instead of being the gateway, it's the gatekeeper.

Anecdotally, algebra is one of the most failed courses in college, and where lots of high school students decide they're "not math people". (I couldn't find hard stats dis/confirming the idea that Algebra is the Great Filter, but educators I've asked so far find it plausible.)

Unfortunately, I've never seen a... good explainer of K-12 algebra? In contrast, there are amazing explanations of more advanced topics — e.g. 3Blue1Brown on calculus and linear algebra — but every explanation I've seen of K-12 algebra has 1) no visual intuition, 2) no motivation behind the ideas, and 3) no proof of why things are the way they are, it's all just memorize, plug-and-chug.

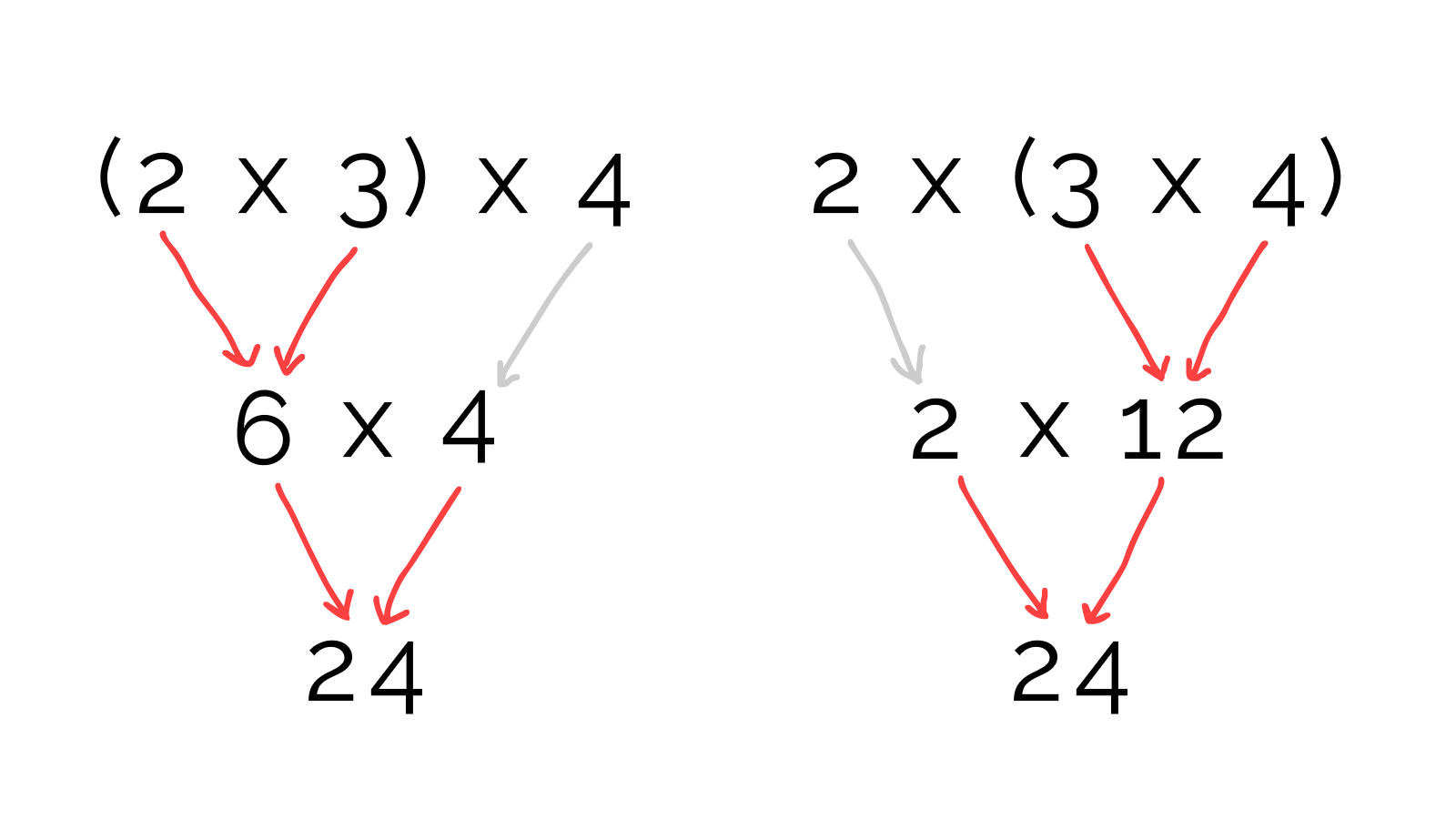

(For example, I realized while outlining this project, that I never learned why brackets don't matter in multiplication ("associativity"). That is, \((a \times b) \times c = a \times (b \times c)\). Yes, it's an "axiom", but why does this specific axiom hold for multiplying numbers?! Because associativity isn't true for some operators! Like subtraction: \((3 - 2) - 1 \neq 3 - (2 - 1)\).)

Anyway, this is why I want to write the K-12 algebra book I wish I had.

(Or, if I may be a bit gooey about it, one day I'd like to have a kid, and I want to write a motivated, intuitive, and beautiful K-12 algebra book for them.)

Progress: ~2% ◻️◻️◻️◻️◻️ An outline, some sketched visualizations.

Time on backburner: 2 years.

Time to finish: 4 months.

Desire to finish: 2/5 ⭐️⭐️☆☆☆

. . .

13. ❎ Why don't brackets matter in multiplication? (novel visual proof!)

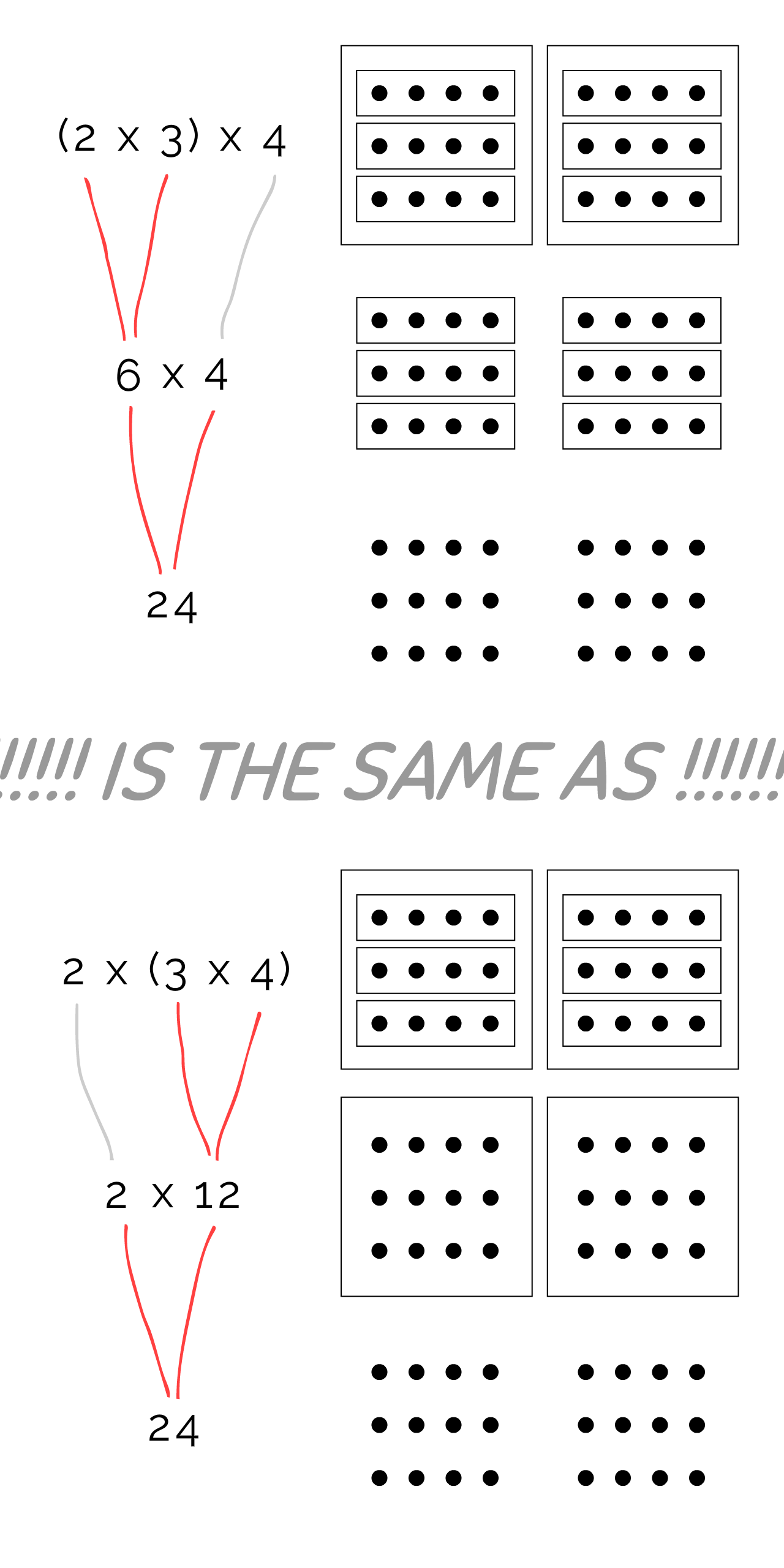

As mentioned above: to the best of my knowledge, there does not exist a visual proof of why the "associativity" axiom, \((a \times b) \times c = a \times (b \times c)\), is true for multiplying whole numbers.

There is a proof of associativity for multiplying more than 3 numbers, but you only see it in college & it still assumes it's an axiom for 3 numbers. Kind of demotivating, isn't it? "Hey kid, memorize this fundamental fact, you'll only learn why it's true 10 years later, except not really."

I was hoping to save the grand reveal of my (as far as I know) first-ever visual proof of a fundamental axiom, for when I make a video about this...

...but, let's just clear out the idea closet. Here it is.

First, what do brackets mean in math? It means "do this first".

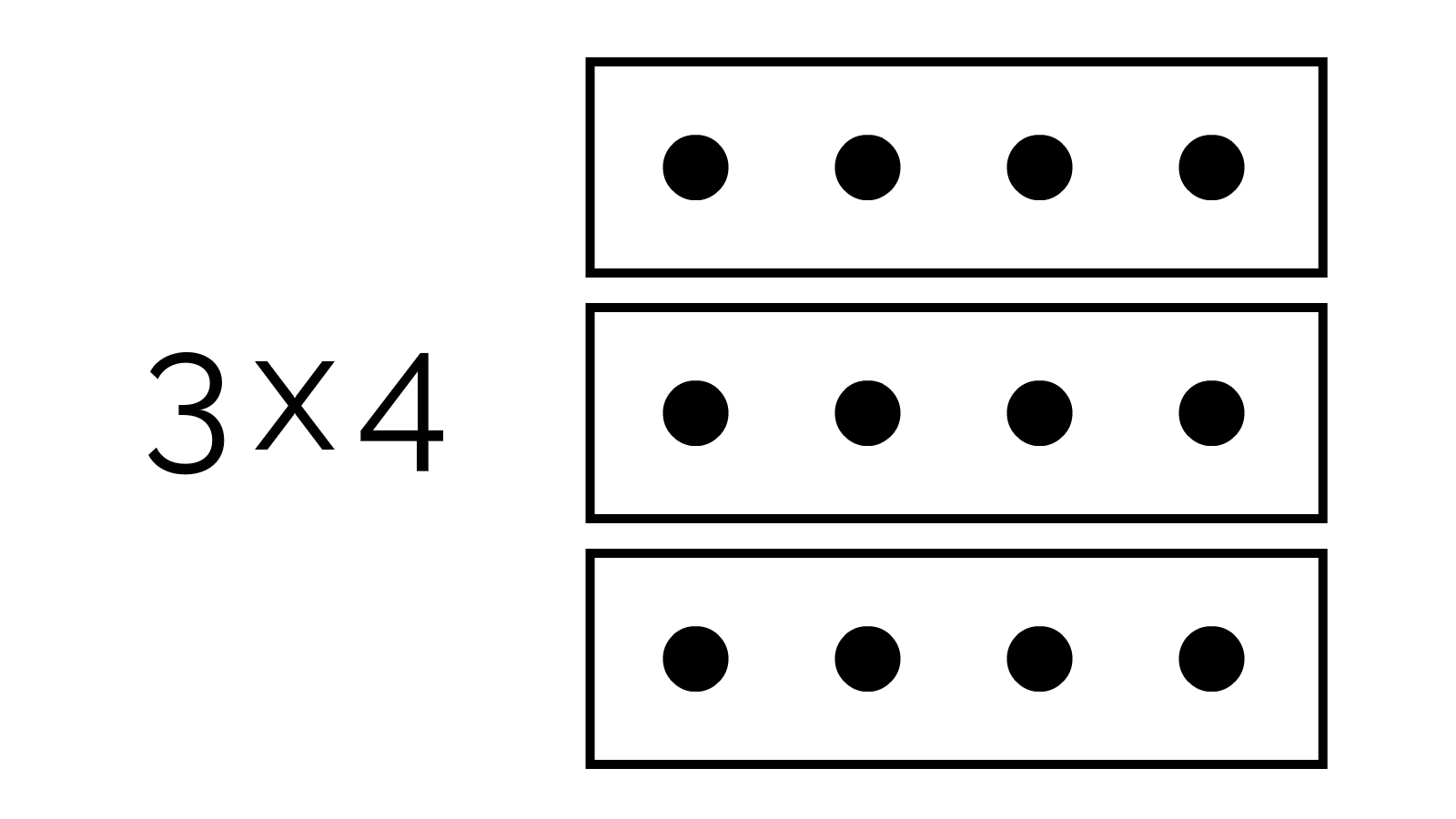

Now, how can we visualize multiplication? Remember "3 times 4" means "3 groups of 4". Like, 3 boxes of 4 balls each:

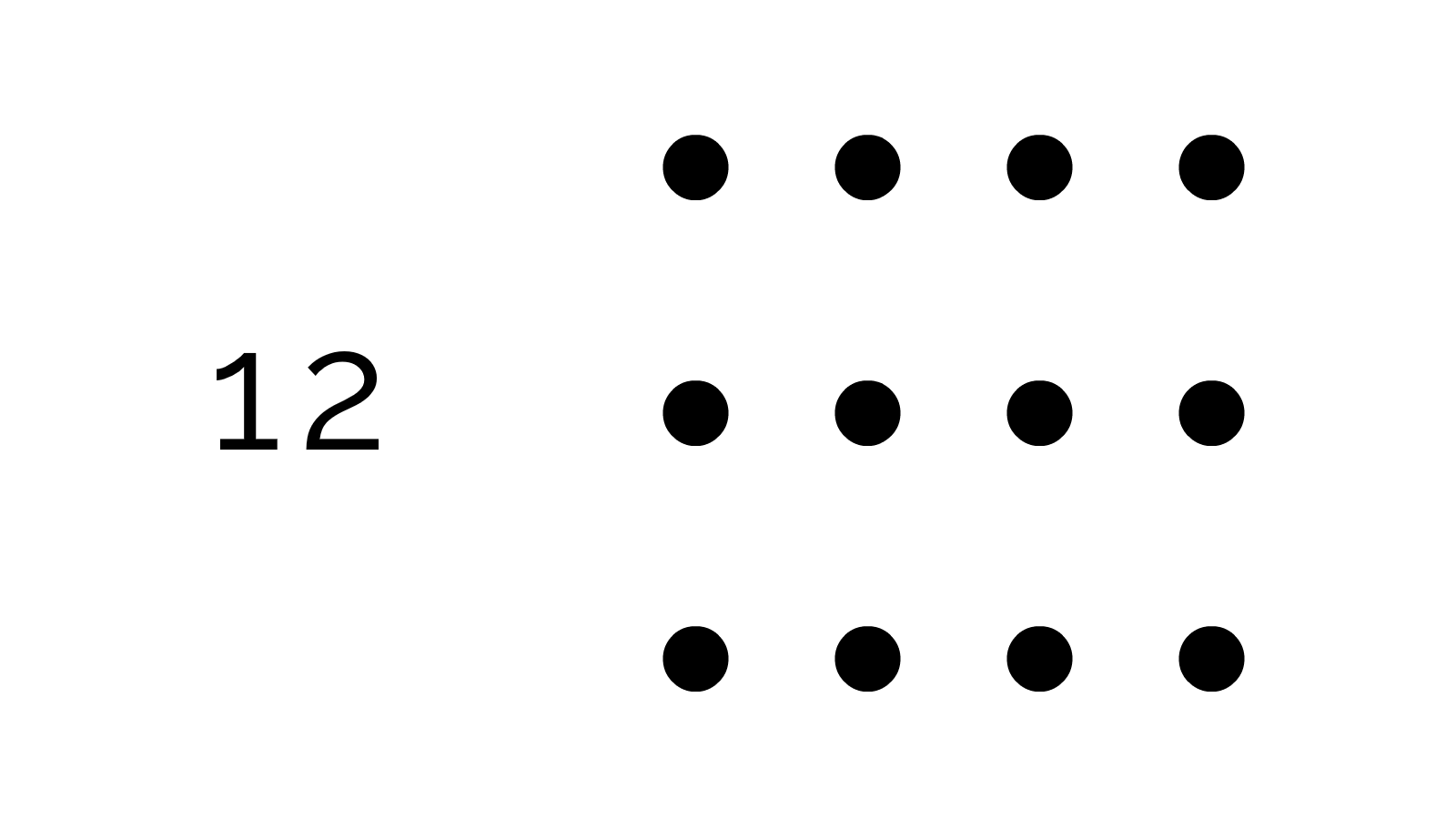

Then, "doing" a multiplication means removing the box container:

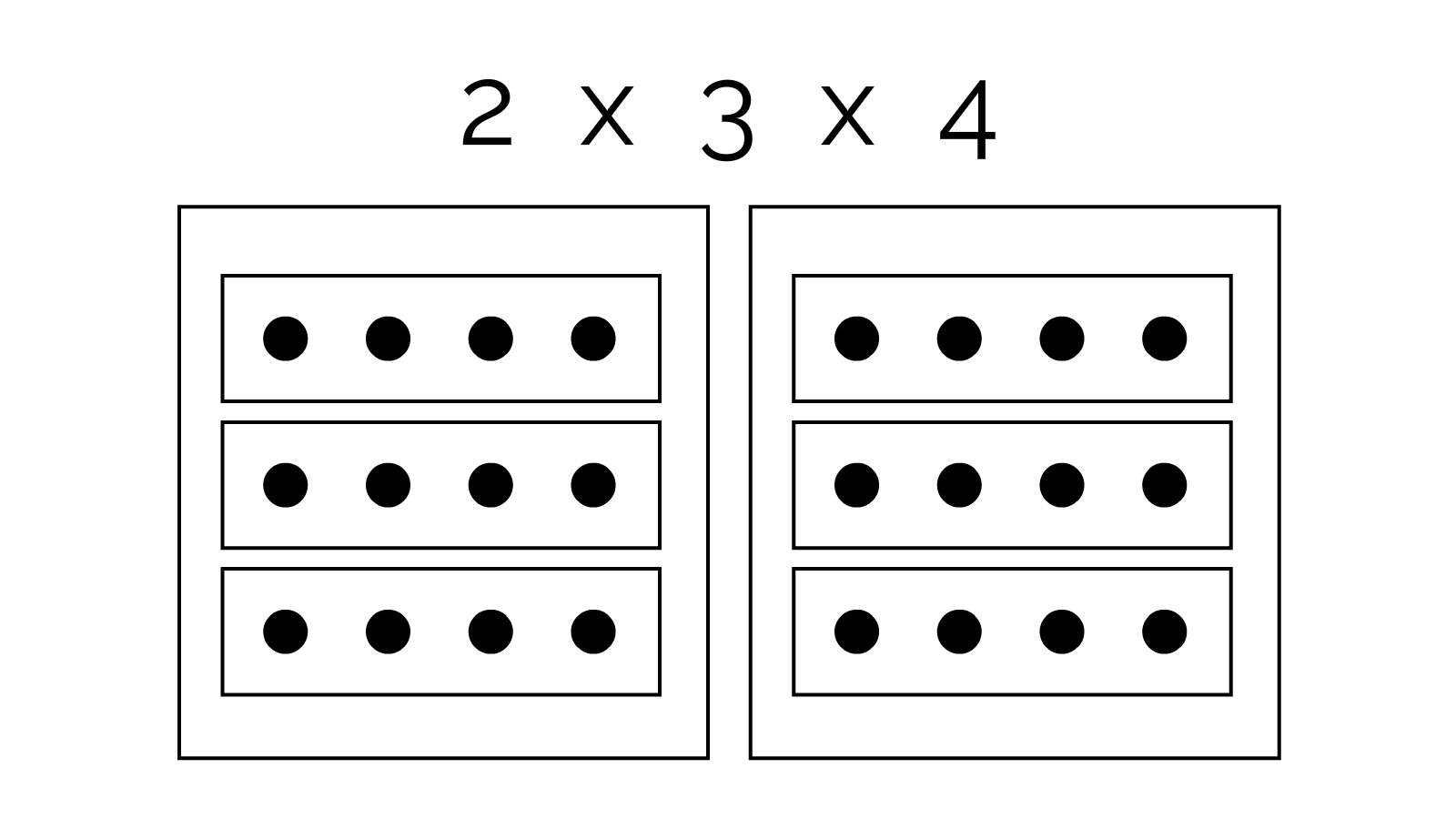

Now, let's picture 2 x 3 x 4. That's 2 boxes of 3 boxes of 4 balls each:

Since doing a multiplication means removing a box container, it should now be clear: it does not matter which order you remove the box containers, you'll always end up with the same number of balls.

In other words, it doesn't matter which multiplications you do first.

In other other words, brackets don't matter in multiplication!

Best of all, with this visual, we don't need the college-level proof: we can instantly see that "associativity" must also be true for multiplying more than 3 numbers!

Here's 2 x 3 x 2 x 3 x 2, bracketed in three different ways, all leading to the same result:

(Granted, this proof only works for whole numbers... but still! Kinda shocking a visual proof of such a basic fact did not exist before!)
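If you'd rather poke at the boxes-and-balls picture in code, here's a minimal Python sketch. The nested-list model and the names `boxes` and `unbox` are my own illustration, not anything standard: a box is a list, a ball is a string, and "doing a multiplication" is flattening one layer of boxes.

```python
def boxes(*sizes):
    """boxes(2, 3, 4) -> 2 boxes, each holding 3 boxes, each holding 4 balls."""
    if not sizes:
        return "ball"
    return [boxes(*sizes[1:]) for _ in range(sizes[0])]

def unbox(pile, depth):
    """'Do' one multiplication: remove the box containers at one nesting depth.

    depth 0 opens the boxes sitting directly in the pile;
    depth 1 opens the boxes one level further in, and so on.
    """
    if depth == 0:
        return [item for box in pile for item in box]
    return [unbox(box, depth - 1) for box in pile]

pile = boxes(2, 3, 4)

# (2 x 3) x 4: open the outer boxes first (6 boxes of 4), then the rest.
outer_first = unbox(unbox(pile, 0), 0)

# 2 x (3 x 4): open the inner boxes first (2 boxes of 12), then the rest.
inner_first = unbox(unbox(pile, 1), 0)

print(len(outer_first), len(inner_first))
```

Either order leaves the same loose pile of 24 balls. Try `boxes(2, 3, 2, 3, 2)` and open the layers in whatever order you like.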

Progress: 80%. ▶️▶️▶️▶️◻️

I guess I could just post the above as a standalone blog post right now. Actually, fine, I just did that.

That's good enough for now; in the future I'd still like to make a video for it.

Time on backburner: 2 years.

Time to finish: 3 weeks. (to make a video)

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

. . .

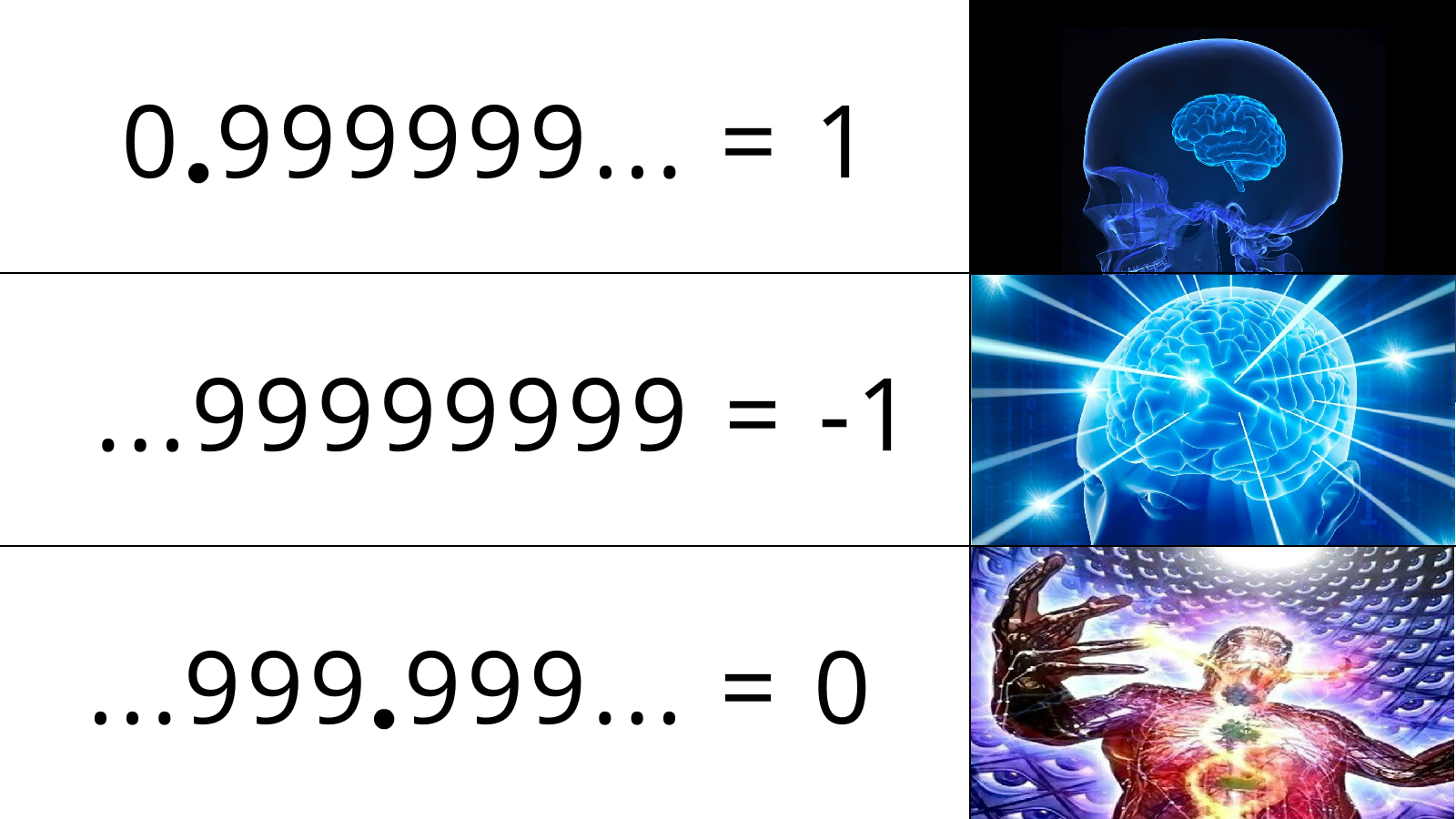

14. 💩 ...999.999... = 0, a math shitpost

Now that I've told you about two helpful math-explainers, here's one that's anti-helpful:

This is a video, with three parts:

- Multiple proofs that 0.999... = 1 (in the real numbers)

- Multiple proofs that ...99999 = -1 (in the 10-adic numbers)

- Multiple "proofs" that ...999.999... = 0.

The first two are widely-accepted mathematics.

One of many proofs for the first: If you accept that \(0.333... = 1/3\), multiply both sides by 3 to get \(0.999...=1\). Tada 🎉

One of many proofs for the second: Let's say \(...9999=x\). What is \(x\)? Well, multiply both sides by 10, to get \(...9990=10x\). Subtract the second equation from the first, to get \(9=-9x\). The only way this is possible is if \(x=-1\). Tada 🎉
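You can get a feel for this on a finite odometer. The Python sketch below is an illustration, not a proof, and `last_digits` is a made-up helper: it keeps only the last few digits of a number, the way a car odometer would, and checks that a run of nines is then indistinguishable from \(-1\).

```python
def last_digits(n, digits):
    """Keep only the last `digits` decimal digits of n (that is, n mod 10^digits)."""
    return n % 10 ** digits

for digits in range(1, 8):
    nines = 10 ** digits - 1   # 9, 99, 999, ...
    # Adding 1 rolls every 9 over to 0; the final carry falls off the left edge.
    print(nines, "+ 1 ->", last_digits(nines + 1, digits))
    # So, to this many digits, the run of nines looks exactly like -1.
    print("-1 ->", last_digits(-1, digits))
```

The 10-adic numbers are (very roughly) what you get when the odometer has infinitely many digits to the left, so the carry never lands anywhere.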

Then, to "prove" the third, just add \(0.999...=1\) and \(...999=-1\) to get \(...999.999... = 0\). Not convinced? Here's another proof: let's say \(...999.999... = x\). Multiply both sides by 10. The right side becomes \(10x\). For the left side, that shifts the decimal point one digit to the right, but it's infinite 9s on either side, so it stays the same. So you get: \(...999.999... = 10x\). Hence, \(x = 10x\). The only way this is possible is if \(x = 0\). Tada 🎉

(This math shitpost was inspired by this Math Overflow discussion from October 2023, which I found through Mark Dominus's blog post. Apparently the third thing is possible in the "10-adic solenoids" but fuck me if I know what that means.)

Progress: 20% ▶️◻️◻️◻️◻️ The above video thumbnail & outline.

Time on backburner: 1 week.

Time to finish: 2 weeks.

Desire to finish: 1/5 ⭐️☆☆☆☆ I don't know if this joke is funny enough to spend 2 weeks of my life on it.

. . .

Speaking of weird proofs...

15. 🔁 Gödel's Proof, explained 100% from scratch

Once upon a time, mathematicians wanted to place their field on a solid foundation of logic. Then in 1931, some young punk named Kurt Gödel shattered that dream forever. Then Gödel starved himself to death. The end.

(Happy post-credits scene: Gödel's proof inspired a gay British lad to invent a lil' niche idea you may have heard of called the computer?!)

Gödel's Proof is one of the most important proofs in history. There are already multiple (very good!) explanations of it: Derek Muller on Veritasium, Natalie Wolchover on Quanta Magazine, Nagel and Newman's Gödel's Proof. These are all layperson-friendly(ish) and rigorous.

Yet, all of them skip some detail. Those details aren't important to the heart of the proof, but it's not obvious how to fill in the gaps. For example: Yes, Kurt Gödel figured out a one-to-one mapping between numbers and statements-about-numbers... but how on earth did he build a frickin' word processor & automatic proof-checker out of pure arithmetic, before even the concept of a universal computer was invented?

So! I want to make the first-ever (as far as I know) layperson-friendly explainer of Gödel's Proof, that skips no details whatsoever: Quines. Primitive recursives. The Chinese Remainder Theorem. The 46 Relations.

ALL of it... and the only pre-requisite you need is arithmetic.

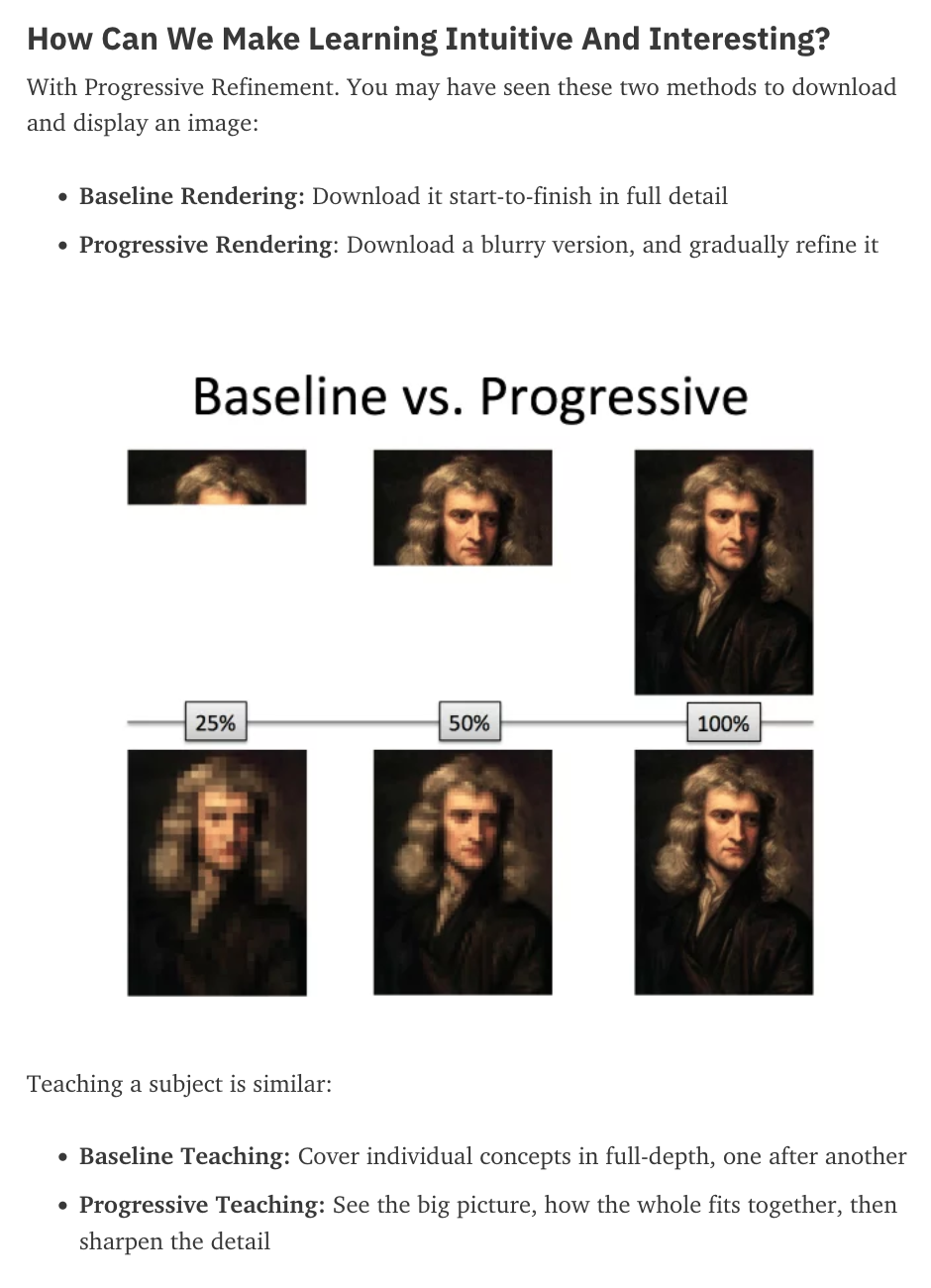

I'll use the approach that Better Explained uses: explain it viewed at 10,000 feet, then explain it again at 100 feet, then explain it at the ground level. Fuzzy, then detailed:

(excellent analogy by Kalid Azad on Better Explained)


If you're interested in what I have so far, a year ago I posted the "10,000-foot view" of Gödel's Proof on my Patreon (publicly accessible post). [7 min read]

Progress: 20% ▶️◻️◻️◻️◻️ The 10,000-foot view, linked above.

Time on backburner: 2 years.

Time to finish: 1 month.

Desire to finish: 2/5 ⭐️⭐️☆☆☆

. . .

Speaking of breaking the foundations of logic...

16. ☯️ "This sentence is false" is half-true, half-false: Fuzzy Logic vs Paradoxes

"This sentence is false".

If false it's true, if true it's false. How to beat this paradox?

First, let's convert true/false to 1 & 0. This is standard computer science.

Next, let's consider the logical operator \(NOT(x)\). What is \(NOT(x)\)?

Well, if we feed it 1 (true) we want it to spit out 0 (false), and if we feed it 0 (false) we want it to spit out 1 (true). There are many functions that can do this, but the simplest is \(NOT(x) = 1 - x\).

Finally, let's convert "This [s]entence is false" into an equation: \( s = NOT(s) \). Or: \( s = 1 - s \).

Well well, there's only one solution to that: \( s = 0.5 \). That is, the sentence is half-true, half-false!
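Here's the same calculation as a minimal Python sketch, for sentences where the algebra isn't as easy to do in your head. The function names are hypothetical, and it leans on an assumption: the sentence's "claim about itself" has to be a continuous function of its own truth value, so that bisection can find where the two meet.

```python
def fuzzy_not(x):
    """NOT(x) = 1 - x, on truth values between 0 (false) and 1 (true)."""
    return 1 - x

def self_consistent_value(claim, lo=0.0, hi=1.0, steps=60):
    """Bisect for a truth value s where s == claim(s).

    Assumes claim is continuous and claim(s) - s changes sign on [lo, hi].
    """
    def gap(s):
        return claim(s) - s

    for _ in range(steps):
        mid = (lo + hi) / 2
        if gap(lo) * gap(mid) <= 0:
            hi = mid   # the crossing is in the lower half
        else:
            lo = mid   # the crossing is in the upper half
    return (lo + hi) / 2

# "This sentence is false": s = NOT(s)
print(self_consistent_value(fuzzy_not))
```

For the liar sentence this homes in on 0.5, matching the algebra above.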

The rest of this project explains:

- Other self-referencing paradoxes

- How fuzzy logic solves all of them

- A proof that fuzzy logic will always have at least one self-consistent answer

- And finally, a crazy idea: truth values that take on complex-number values, allowing for true contradictions and false tautologies.[18]

Progress: 20% ▶️◻️◻️◻️◻️ The outline.

Time on backburner: 2 years.

Time to finish: 2 weeks.

Desire to finish: 2/5 ⭐️⭐️☆☆☆

. . .

17. 👯 Your choosing-algorithm should choose itself: intro to Functional Decision Theory

You've been kidnapped by a mad game theorist.

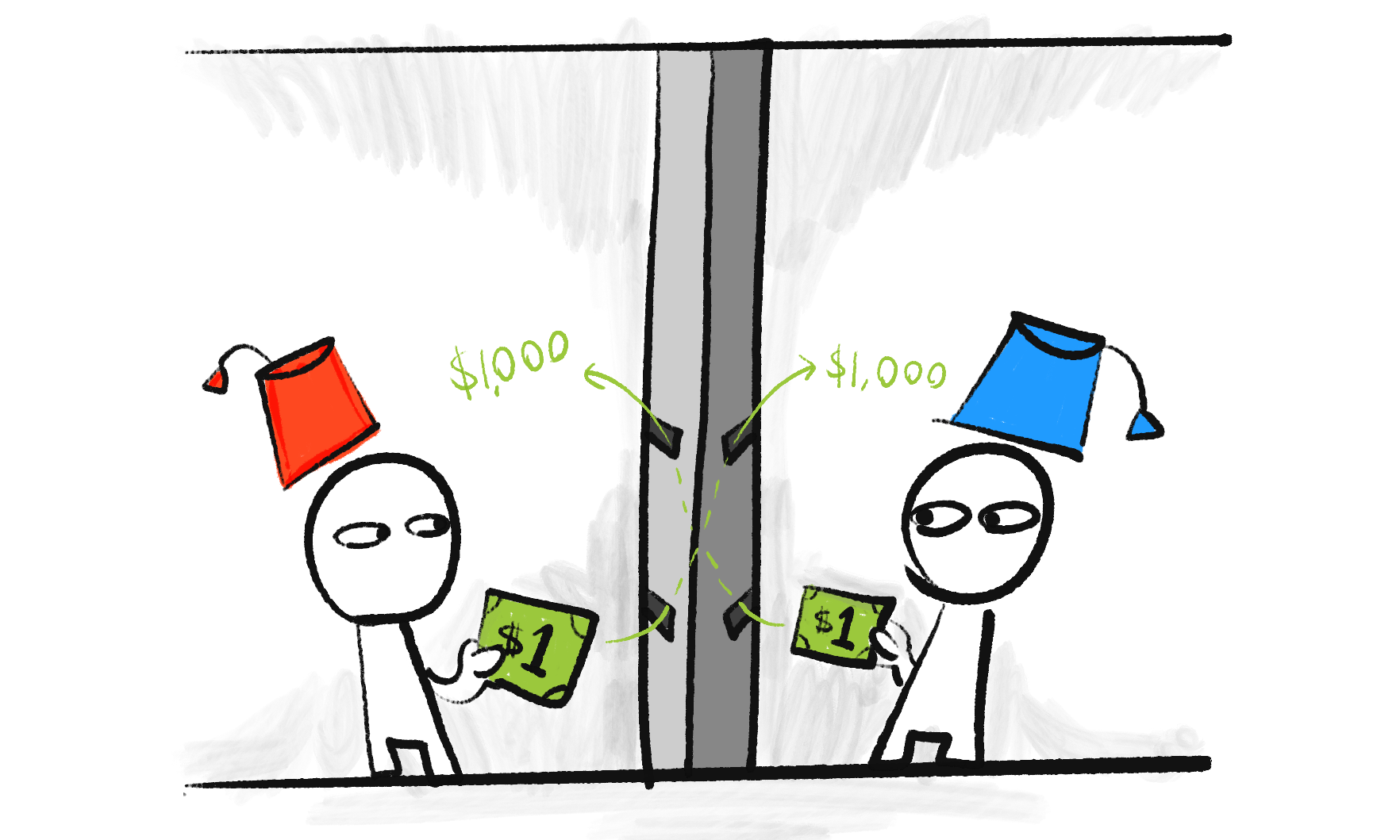

You wake up in a room. You're playing a game against an unseen person. You both have $1 in your pocket, and one minute to decide. If you put your $1 into a slot, you'll lose it, but the other person will get $1,000 at the end of the minute. Otherwise, they get nothing. They face the same choice. You two will only play this game once; there's no opportunity for reciprocation or punishment.

Standard Game Theory says you should not put in the $1. You can't influence the other person, so you'd get that $1,000 or not no matter what you do. So, hold onto the $1.

But the other person would think the same, so neither of you gets the $1,000, and you each only walk away with $1... even though if you had both put $1 in, you'd each walk away with $1,000!

Now, here's the twist. The other person is you.

Or rather, the mad game theorist scanned your brain, and the other player is a non-sentient simulation who's 99% likely to pick the same choice you would.

Alas, standard game theory STILL says to keep your $1, because you still can't influence the other player. The brain scan already happened, the simulation's running independent from you.

Y'know what, let's make it weirder: the simulation has already run. It's already determined, before you woke up in the room, whether or not you'd get the $1,000 at the end of the minute. Clearly, you can't affect that at all! There is no cause & effect. So, a "rational" actor would keep their $1, and only get $1.

And yet... someone who defied standard "rationality" would put in their $1, and (with 99% probability) their pre-run sim would as well, and they win $1,000.

Is this a bizarre thought experiment? Okay, real-world application: voting. It's a common-ish belief amongst classic-economists that voting is irrational, because your individual vote has essentially zero chance of flipping an election with millions of voters.[19]

And yet, if the 10% most-rational citizens believed this... then they'd all abstain from voting, and the more-rational candidate would lose 10% of their vote... which is definitely enough to tip an election.

The point is: standard game theory is borked. Enter Functional Decision Theory (FDT)[20]: an improved theory of rationality that not only solves the above problem, but also a whole host of other classic rationality paradoxes -- (including the infamous Newcomb's Paradox) -- all with a single, elegant realization:

Your choosing-algorithm should choose itself.

Would it be selfishly better for you if your choice-algorithm said to put in the $1, or not?

- If it said "yes", you & your sim, both running on the same choice-algorithm, would both put in the $1, and thus you get $1,000.

- If it said "no", you only get $1.

Therefore, it would be better if your choice-algorithm said to put in the $1, and so that is in fact what your choice-algorithm will say. The algorithm chooses itself. (Amazing, since there is no cause & effect at play! Your self-sim already ran before you woke up!)
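The comparison above is small enough to write out as arithmetic. This is a minimal Python sketch under the thought experiment's stated numbers ($1 stake, $1,000 prize, a sim that matches you 99% of the time); `expected_payoff` and its parameters are names I made up for illustration, not anything from the FDT paper.

```python
def expected_payoff(put_in_dollar, match_prob=0.99, prize=1000, stake=1):
    """Your expected dollars at the end, given what your choice-algorithm says.

    match_prob is the chance the simulation makes the same choice you do.
    """
    # You only get the prize if the sim puts its dollar in.
    prob_sim_puts_in = match_prob if put_in_dollar else 1 - match_prob
    kept = 0 if put_in_dollar else stake
    return kept + prob_sim_puts_in * prize

for choice in (True, False):
    label = "put in $1" if choice else "keep the $1"
    print(label, expected_payoff(choice))
```

With a 99%-accurate sim, "put in" is worth roughly $990 on average and "keep" roughly $11. Set `match_prob=1.0` and you get the clean $1,000 versus $1 from the bullets above.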

Same logic applies to the voting case: Say 10% of people are rational, trying to follow "the rational choice-algorithm". It would be selfishly better if that algorithm said to vote (and tip the election towards a rational candidate), therefore, that's what the algorithm says to do!

Project Pitch: deep-dive explain FDT for non-math-y laypeople, address the (valid) critiques against it[21], and some future research directions.

Progress: 20%. ▶️◻️◻️◻️◻️

Two years ago, I already wrote a draft explainer of FDT on a publicly-accessible Patreon post [16 min read], but I'd like to re-do it better & deeper.

Time on backburner: 2 years.

Time to finish: 2 weeks.

Desire to finish: 3/5. ⭐️⭐️⭐️☆☆

As far as I know, there is not yet an accessible-to-middle-schoolers-yet-still-accurate explainer of FDT. (Though, kudos to the original paper by Soares & Yudkowsky[20:1] for being very readable for a math paper. For a math paper.)

🔔 Explaining Science & Statistics

She blinded me with... science!

- Correlation is evidence of causation, & other Bayesian fun facts ↪

- Statistics without the Sadistics ↪

- Causal Networks tutorial ↪

- Systems Biology tutorial ↪

- Emotions in 3D: The Valence-Arousal-Dominance Model ↪

. . .

18. 🐦 Correlation is evidence of causation, & other Bayesian fun facts

(TONE INDICATOR: THIS IS TONGUE-IN-CHEEK)


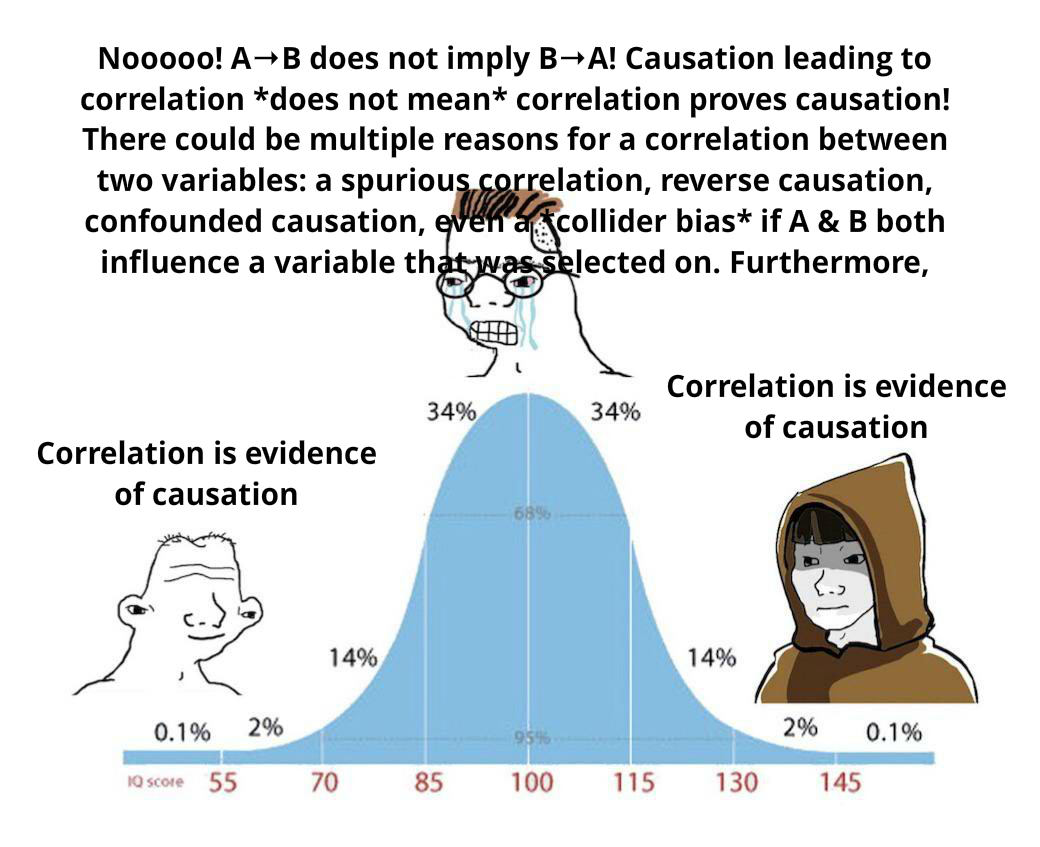

As the great statistician Ronald Fisher once said: "Correlation is not evidence of causation". He said this in defense of Big Tobacco companies, who were dismissing the "mere correlation" between smoking and lung cancer. Sure, Fisher was being paid by tobacco companies, but that correlation doesn't prove it caused him to defend th--

Another one you may have heard: "Absence of evidence is not evidence of absence". Well-meaning, but in practice, conspiracy theorists use it to explain why there's so little evidence. In fact, the lack of evidence is the evidence: it's a cover-up, don't you see?! We're through the [corkboard with thumbtacks and string] looking glass now, sheeple, just--

Hang on, both of those sayings are just basic logic. A → B doesn't logically mean B → A. Are you saying logic is broken?

Yes. Classic logic is broken. Classic logic implies that if seeing a black raven is evidence that all ravens are black, then seeing a non-black non-raven is also evidence all ravens are black. (Raven Paradox) Why? Because "if raven, then it's black" is logically equivalent to "if it's not black, then it's not a raven". Therefore, a black raven is logically-equivalent evidence to a non-black non-raven.

But fear not dear citizen, BAYESIAN LOGIC is the solution to all of these! Project Pitch: an intro to Bayesian reasoning, with practical takeaways, all framed around these three paradoxes.

Progress: 5% ◻️◻️◻️◻️◻️ Just an outline

Time on backburner: 3 years

Time to finish: 4 weeks

Desire to finish: 2/5 ⭐️⭐️☆☆☆ There's already great, accessible explainers of Bayes' Theorem from 3Blue1Brown and Arbital. Though, I guess my value-add could be connecting Bayes to the new field of causal inference and a solution to the Raven Paradox.

. . .

19. 🧮 Statistics without the Sadistics

To paraphrase what Richard Feynman wrote in his book QED, there's two ways to do "987 minus 123":

- Unintuitive but efficient: The standard pen-and-paper algorithm you learnt in school.

- Intuitive but inefficient: Put 987 beans in a jar, take out 123, then count what's left in there.

The first method is how professionals should do work, but I argue it's NOT how it should be taught to beginners. You need intuition, dang it!

Statistics courses are almost always taught the first way: plug-and-chug formulas, don't ask where they came from. But is there an intuitive (even if inefficient) way to do statistics?

There is! It's called... resampling! (or, "Monte Carlo" methods) And thanks to the modern computer, it's okay for them to be inefficient — computers can do it a billion times faster than you, no complaints.

Analogy: Flip a coin 1,000,000 times. ~50% of the time it lands Heads, ~50% it's Tails. This lets us say that a coin has a 50-50 chance of being Heads/Tails.

Likewise: If we wanted to know what the margin of error on a poll is, we could simulate re-running the poll 1,000,000 times, to see how much the final answer would vary.

Resampling: JUST DO IT™️ (a million times or so)
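Here's roughly what "just do it" looks like for the poll example, as a minimal Python sketch. The poll numbers are invented for illustration, `bootstrap_margin` is a hypothetical name, and it only does 2,000 re-runs instead of a million so it finishes quickly. Each "re-run" draws 1,000 answers at random (with replacement) from the answers we actually got.

```python
import random

def bootstrap_margin(responses, reruns=2_000, seed=0):
    """Estimate a poll's 95% margin of error by re-running it on resampled data."""
    rng = random.Random(seed)
    n = len(responses)
    shares = sorted(
        sum(rng.choices(responses, k=n)) / n   # one simulated re-run of the poll
        for _ in range(reruns)
    )
    # The middle 95% of simulated results: chop 2.5% off each end.
    low = shares[int(0.025 * reruns)]
    high = shares[int(0.975 * reruns)]
    return (high - low) / 2

# Hypothetical poll: 1,000 people, 520 said "yes" (1) and 480 said "no" (0).
poll = [1] * 520 + [0] * 480
print(f"margin of error: +/- {bootstrap_margin(poll):.1%}")
```

No formula needed: the margin of error is just "how far did the middle 95% of re-runs spread?" For a poll like this it should land close to the roughly 3 points the textbook formula gives.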

Project Pitch: an interactive textbook, with playable simulations, to learn all the core concepts from a traditional Statistics class, from a resampling perspective. (While acknowledging its limits, e.g. resampling doesn't handle power-law distributions well.)

Progress: 0% ◻️◻️◻️◻️◻️ Nothing

Time on backburner: 5 years

Time to finish: 3 months

Desire to finish: 1/5 ⭐️☆☆☆☆ Honestly this 5-minute talk pretty much gets the entire point across, concisely & humorously: Jonathan Stray (2016) “Solve Every Statistics Problem With This One Weird Trick”. So, my project's kinda redundant, although playable simulations might be a value-add.

. . .

20. 🎲 Causal Networks tutorial

I already gave a pitch above (🤖 Automated Causal Inference) for why Causal Inference could be lifesaving in public health & policy.

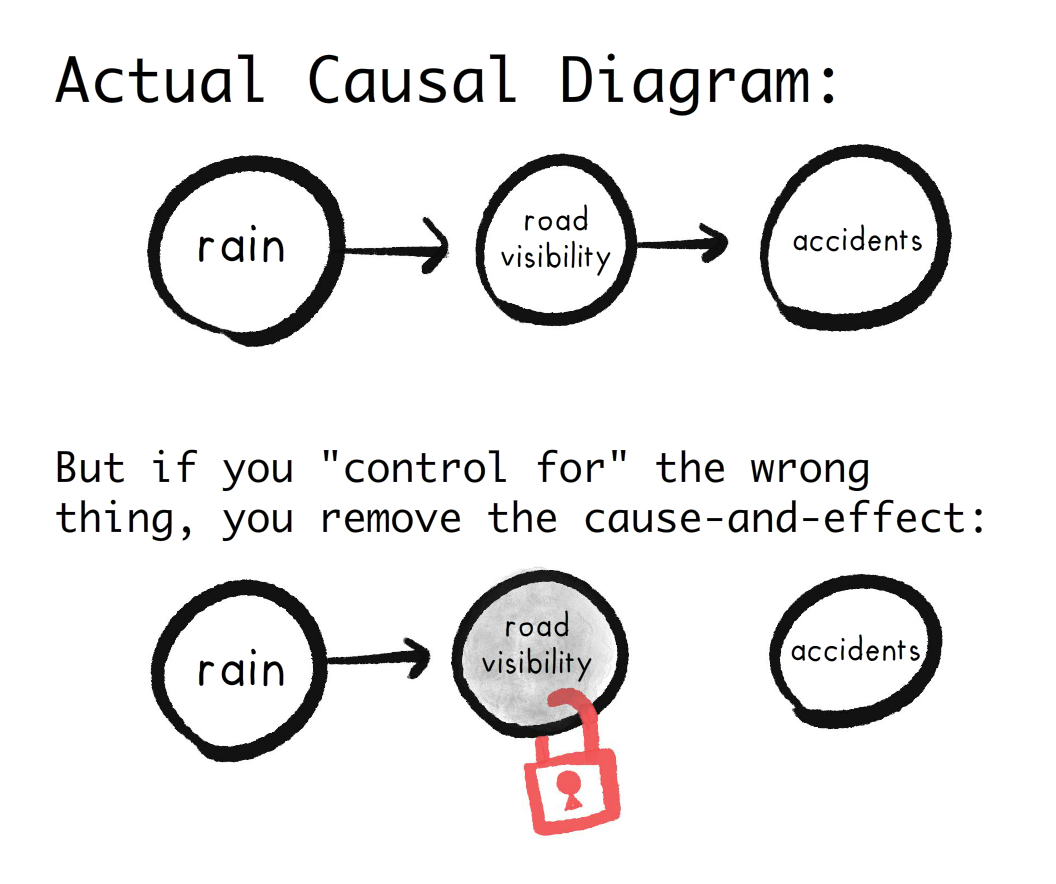

But of course, you have to infer causation carefully. If you see a correlation between A & B, you can't just "control for everything that's not A & B."

For example: let's say you notice a correlation between rainy days and car accidents. If you control for road visibility, the correlation will vanish –– but that's because rain causes car accidents through road visibility!

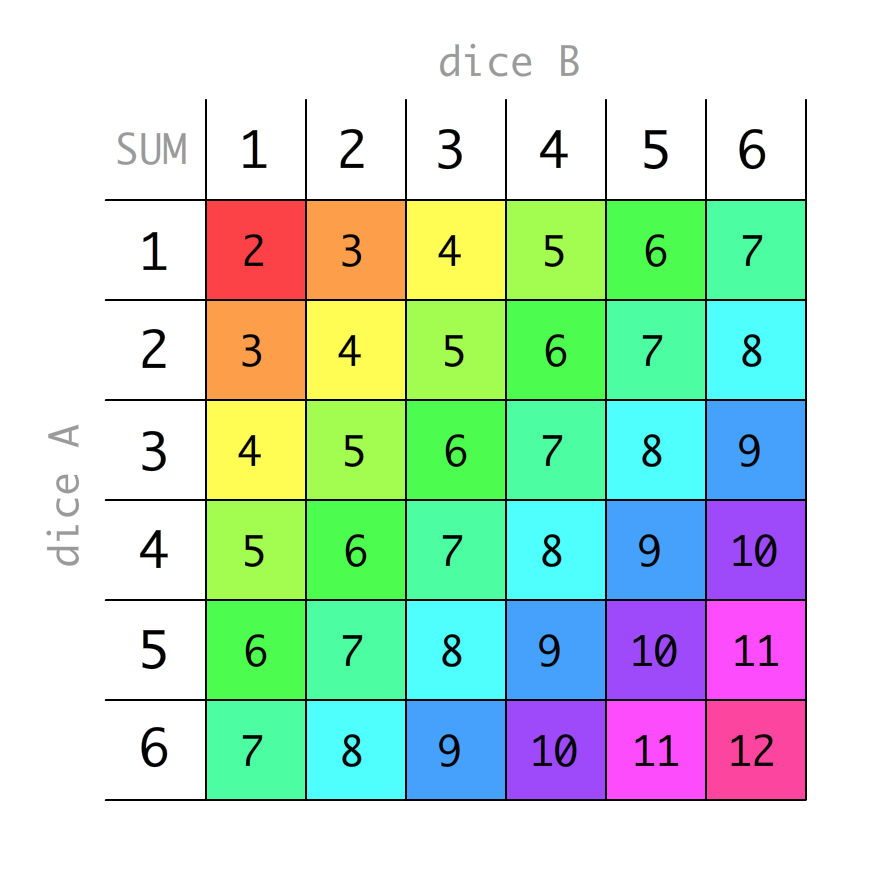

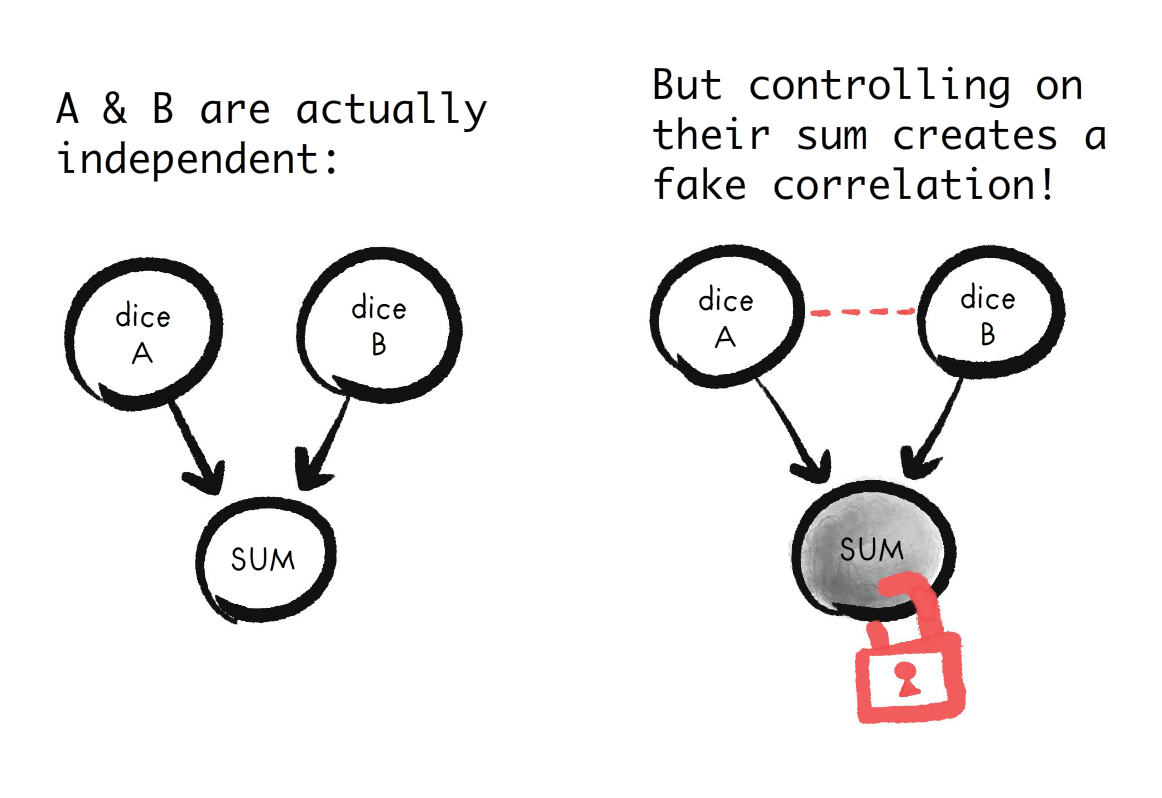

A less obvious risk of controlling for too much: you can accidentally create a correlation. For example, let's say you have two independent six-sided dice, A and B, and their sum is Sum:

If you control for Sum, you'll create a fake correlation between A and B! For example, look at the above table: If you lock Sum = 7, then if A=1, then B=6. If A=2, then B=5. If A=3, then B=4. And so on. It now looks like A & B are inversely correlated... even though we know those dice are independent! (This is called a "collider bias".)
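You can check this by brute force, since two dice only have 36 possible rolls. A minimal Python sketch (the `correlation` helper is a hand-rolled Pearson correlation, written out so nothing is hidden in a library call):

```python
from itertools import product

def correlation(pairs):
    """Pearson correlation of a list of (a, b) pairs."""
    n = len(pairs)
    mean_a = sum(a for a, _ in pairs) / n
    mean_b = sum(b for _, b in pairs) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in pairs)
    var_a = sum((a - mean_a) ** 2 for a, _ in pairs)
    var_b = sum((b - mean_b) ** 2 for _, b in pairs)
    return cov / (var_a * var_b) ** 0.5

# All 36 equally likely (A, B) rolls of two independent dice.
all_rolls = list(product(range(1, 7), repeat=2))

# "Controlling for" the collider: only keep rolls where Sum = 7.
sum_is_7 = [(a, b) for a, b in all_rolls if a + b == 7]

print(correlation(all_rolls))
print(correlation(sum_is_7))
```

Across all rolls the correlation comes out to 0, as it should for independent dice. Within the `Sum = 7` slice it's -1: a perfect inverse correlation, created purely by what we chose to hold fixed.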

So, how to know what to control for or not? Thankfully, Judea Pearl has an algorithm for figuring out what to control... and it's visual!

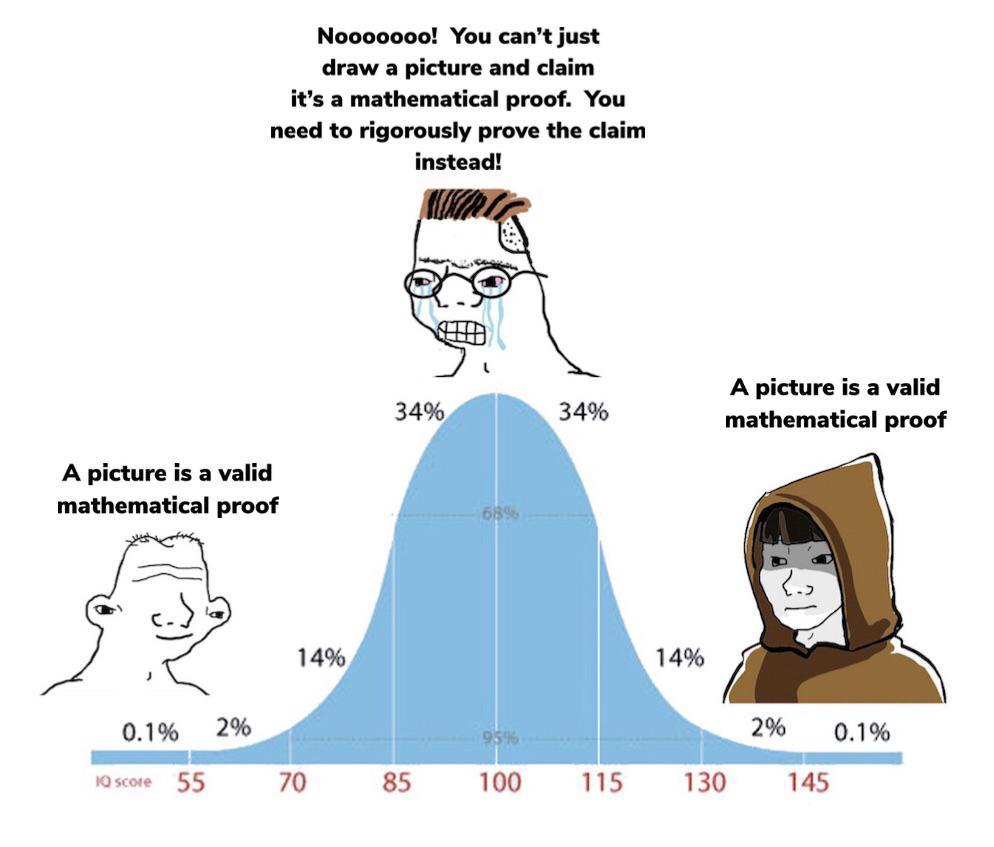

(originally found on /r/mathmemes, about category theory)


Pearl diagrams also explain lots of other stats techniques, like Instrumental Variables, Mendelian Randomization, Mediator Analysis, etc.

Project Pitch: a mini-tutorial (with flashcards & interactive practice problems) to train folks in using Pearl diagrams.

Progress: 0% ◻️◻️◻️◻️◻️ Nothing, just an outline.

Time on backburner: 5 years

Time to finish: 1 month

Desire to finish: 2/5 ⭐️⭐️☆☆☆

. . .

21. 🐮 Systems Biology tutorial

CRISPR, mRNA vaccines, and DNA printing, oh my! It seems like biology is one of the great new frontiers in science. But: biology is complex [citation needed]. But: there's new math to help us understand those complex dynamics!

But: I asked a friend in biology how much math biologists know, and she said, "ha ha ha ha ha". I asked her to elaborate, and she added: "ha ha HA HA HAA HAAAA AAAAAAAAAAAHHHHHH!!!!!"

Point is: most working biologists aren't that skilled at math. However, one of my skills is turning math into pretty interactive visualizations! So, I'd like to take Uri Alon's Intro to Systems Biology and Steven Strogatz's Nonlinear Dynamics & Chaos, drop the analytical math (you can't analytically solve most nonlinear equations anyway), and focus on giving folks a core, visual, beautiful intuition for the new math of biology.

Progress: 0% ◻️◻️◻️◻️◻️ Nothing

Time on backburner: 3 years

Time to finish: 2 months

Desire to finish: 2/5 ⭐️⭐️☆☆☆ I snoozed, I losed. There's already a great animated series on Systems Biology by Nanorooms. My value-add could be the playable, editable simulations.

. . .

22. 🥹 Emotions in 3D: The Valence-Arousal-Dominance Model

Arousal and Dominance, you say?...

No, no. It's a scientific model of emotions. You may know of the most famous model: Paul Ekman's 6 emotions, anthropomorphized in Pixar's Inside Out. (Well, 5 of them got anthro'd. "Surprise" got dropped.)

But one alternate model is the Valence-Arousal-Dominance (VAD) model. Analogy: Every color we see is "just" a mix of red/green/blue sliders. (Those are [roughly] the color cones on your retina. And if you zoom in on a screen pixel, you'll see red/green/blue.) Likewise: Every emotion we feel is "just" a mix of three sliders:

- Valence: How good or bad it feels

- Arousal: How energizing it feels

- Dominance: Feeling "in charge" vs "humbled"

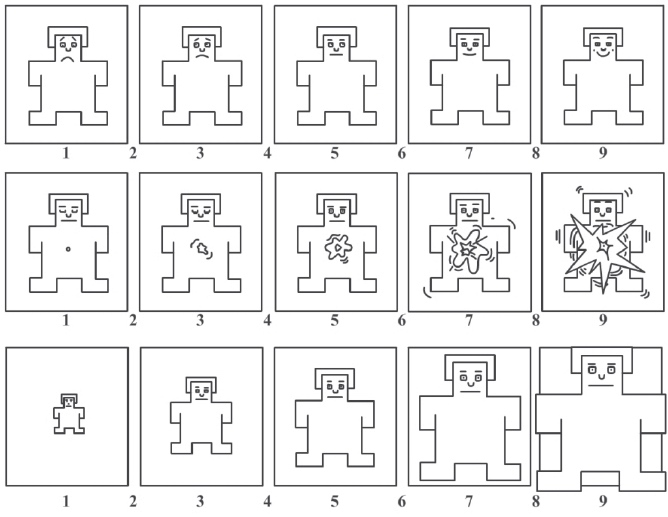

(the Self-Assessment Manikin (SAM) from Bradley & Lang (1994))

For example, 😠 Anger is negative, high-arousal, dominant. 🥹 Awe is positive, mid-arousal, submissive.

I like the VAD model because it seems to map neatly onto specific facial-expression muscles:

- Valence: corners of mouth up (positive) vs down (negative)

- Arousal: eyes wide, eyebrows raised, jaw slack

- Dominance: eyebrows tilted down (dominant) vs up (submissive)

They also seem to map to Reinforcement Learning & actions?

- Valence: Reinforces future-you to approach/create situations like this (if positive), or avoid/remove situations like this (if negative).

- Arousal: Gives you energy to urgently do the task (if high arousal), or saves energy (if low arousal).

- Dominance: Causes you to "double-down" on your goals (if dominant), versus become "open to change" (if submissive).

I like knowing the "functions" of emotions. I can process my emotions better if I know exactly what they evolved to do.

Project Pitch: make a three-slider VAD toy for generating facial expressions + a mini-explainer of the VAD model.

Progress: 5% ◻️◻️◻️◻️◻️ UI design for the VAD Face Generator.

Time on backburner: 5 years.

Time to finish: 3 weeks.

Desire to finish: 2/5 ⭐️⭐️☆☆☆

. . .

🧐 Philosophy / Personal Reflections

I'm not a total STEM-head. I can do a humanities. Like philosophy. Watch me do a philosophy right now

- Emotions are for motions: Notes on using feelings well ↪

- You don't need faith in humanity for a moral or meaningful life ↪

- You don't want assholes on your side ↪

- Virtue Ethics for Queer Atheist Nerds ↪

- If classic computers can be conscious, then dust is conscious ↪

- On non-reproductive, similar-in-age, consensual-adult incest ↪

. . .

23. 🔥 Emotions are for motions: Notes on using feelings well

One camp says: Trust your gut, Listen to your heart, Your feelings are valid.

The other camp says: Use your head, Emotions are irrational, Facts don't care about your feelings.

They're both right in some ways, both wrong in others.

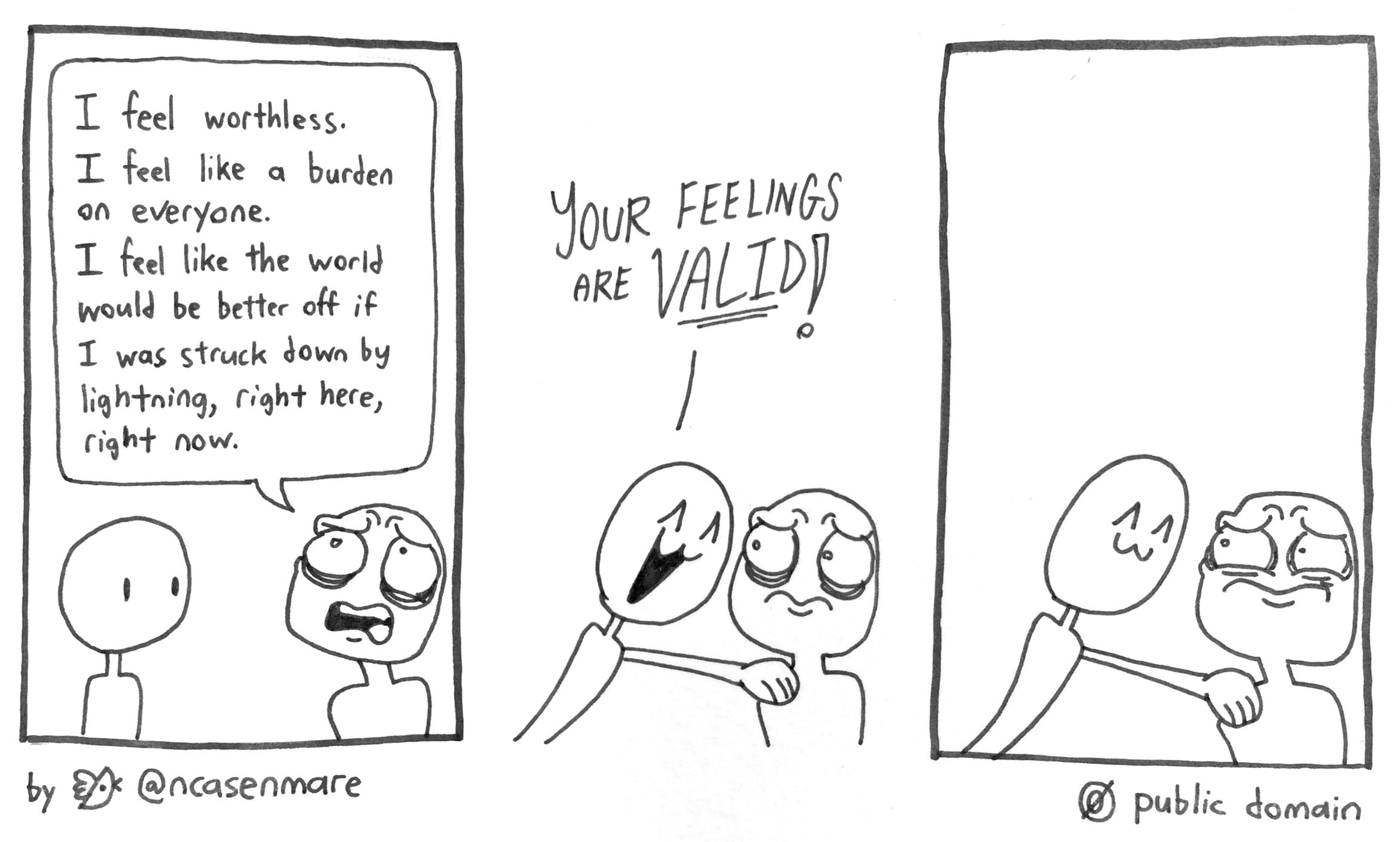

Re: "Your feelings are valid" -- Okay, so feeling that I'm worthless and deserve to die, or a bigot feeling that [ethnicity X] is sub-human, that's "valid"?

(from a comic i posted 4 years ago)


Re: "Emotions are irrational" -- Even in pure math, mathematicians report being guided by emotions, of elegance & beauty. Not just motivated by emotion like a reward, but the emotions guide the day-to-day work, like drug-sniffing K-9s for mathematical truth. But also, there's no "pure rational" reason to choose peace over genocide, or flourishing over torture; they're all just different arrangements of atoms. The fact I like peace & justice, or even just survival, is emotional.

Okay, so we need "both emotion and reason". Fair enough, but that's like saying a jacket needs "both fabric and buttons" -- How exactly, combined in what way?

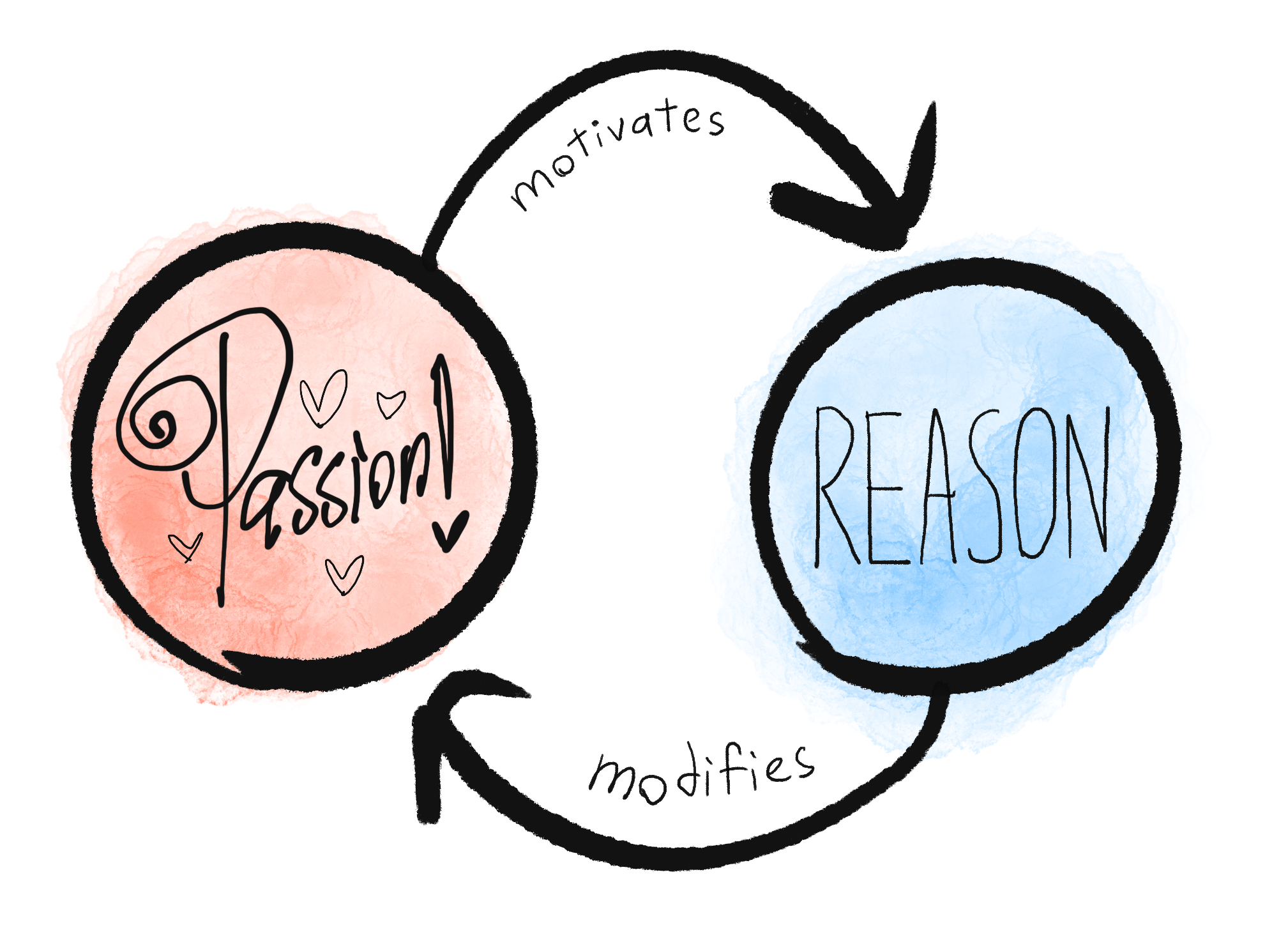

I think a good emotion-reason system is a strange loop: Passion drives reason (as Hume proposed), but reason can in turn modify our passions.

Concrete example:

- Emotion motivating reason:

- I value saving lives, getting rich, and hard challenges.

- Given those values, I want to become a surgeon.

- Reason modifying emotions:

- [Removing an emotion] But I'm squeamish around blood, so I'm going to do exposure therapy on myself, and watch autopsy & surgery videos to desensitize myself.

- [Adding an emotion] Surgery requires being clean & meticulous, so I'll instill the value of tidiness into myself by... I dunno, binge-watching Marie Kondo?

Yes, it's weird that I can change my values according to my values. But it's not any weirder than a co-operatively owned company collectively deciding who to fire or hire. New emotional responses get "hired"/"fired" based on how well they serve the current collective of emotions.

There's no "boss" emotion. No permanent cast members. There is no "True Self"; I am the Mississippi River: no fixed form, but each form logically leads to the next. Continuity, not essence. I am a Ship of Me-seus.

(Project Pitch: this essay's part philosophy/science, part mental health resource/self-help, part personal reflection. Also ties into VAD Model of Emotions and the game theory of self-modification.)

Progress: 5% ◻️◻️◻️◻️◻️ An outline.

Time on backburner: 2 years

Time to finish: 2 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

. . .

24. 💖 You don't need faith in humanity for a moral or meaningful life

When you type "lost faith in humanity" or similar on Google, you get one of two kinds of results:

- "Here's 27 stories to help you regain your faith in humanity!"

- "You're a pathetic lazy edgelord coward."

I am not joking about the second. Steven Pinker's book Enlightenment Now starts with several pages insulting people who are worried humanity is doomed. These two popular posts also reflect the second's sentiment.

Imagine if you told people "I feel depressed", and everyone told you your "depression" is just you being a coward and shirking away from life. That is what advocates of "faith in humanity" do.

After a long, dark mental health "episode" in my life, I would like to write the thing I wish I'd seen as a kid:

You don't need faith in humanity.

Note this is very different from "you should lose faith in humanity". I'm going further, and saying that whether or not humans are "faith-worthy" is completely irrelevant.

Y'know, it's funny. I believed in both of the following for most of my life:

- You should have faith in humanity

- You should invest your emotional energy into things only within your "circle of control".

It was only a couple years ago I realized the two directly contradict each other. I cannot directly control the fate of humanity, or the kindness or rationality of the median person. And yet, "faith"? That word implies investing a huge chunk of my emotions and moral compass into something I can't control.

I feel... relieved but embarrassed, like finding out Santa Claus doesn't exist? In hindsight, other reasons why – even if all humans were saints & we're destined to create an intergalactic utopia – "faith in humanity" is unhealthy:

- "Faith" in anything means ignoring evidence. "I'm losing faith that the Earth is flat". "What?! Here's 27 stories to help you restore your faith in a flat Earth!"

- It would be fucked up if someone said "I have faith in Asian nature!" or "Ah yes, the quintessential Asian condition". What the fuck? There is nothing meaningful to say about such a large diverse group of people. That's doubly true for "humans".[22]

- Sure, there's a correlation between "having faith in humanity" and subjective well-being. But 1) it could be confounded causation; someone who was abused as a kid would have lower faith-in-humanity and well-being, and 2) "Believing X leads to good outcomes" doesn't mean X is actually true.

- But isn't believing "everyone is equal" fundamental to a just democracy? What does that imply, that if someone actually was biologically different (left-handedness, autism, Down's) it's ok to oppress them?

- But isn't believing in "our common, shared humanity" needed for peace? Are you fine torturing cats because they're not human? If "commonality" is a pre-requisite for peace & justice, just skip to being an ethno-nationalist.

- But can't we be proud of humanity's achievements? Going to the moon, eradicating smallpox, much lower child mortality, etc? Again, if you're going to be proud of stuff you didn't do, but bask in the credit because you're genetically related, just become an ethno-nationalist.

So what's the alternative? I think "put emotional energy on what you can control" is the way to go: this implies some mix of virtue ethics, (modified) Stoicism, maybe social-contract theory.

(Full essay will expand on that. Also, if you're suspicious that someone would care about this question that much, well, I'm recovering from moral OCD, which is OCD but about morality. Think Chidi from The Good Place.)

My "faith in humanity" was psychologically identical to other faiths. It was my source of meaning, morality, and hope. And like other faiths, it was built upon sand, I felt bad for even thinking critically about it, and its strongest defenders are mostly un-empathetic assholes.

Quitting my faith has been... well, if not mentally healthy, at least nowhere as bad as I thought it'd be.

I feel free. Turns out, you can just do good & feel good regardless of the 8 billion others.

Progress: 5% ◻️◻️◻️◻️◻️ An outline.

Time on backburner: 3 years

Time to finish: 2 weeks

Desire to finish: 4/5 ⭐️⭐️⭐️⭐️☆ Would be cathartic to write, and would help me consolidate some hard-but-helpful mental-health lessons I've learnt in the past few years.

. . .

25. 🤬 You don't want assholes on your side

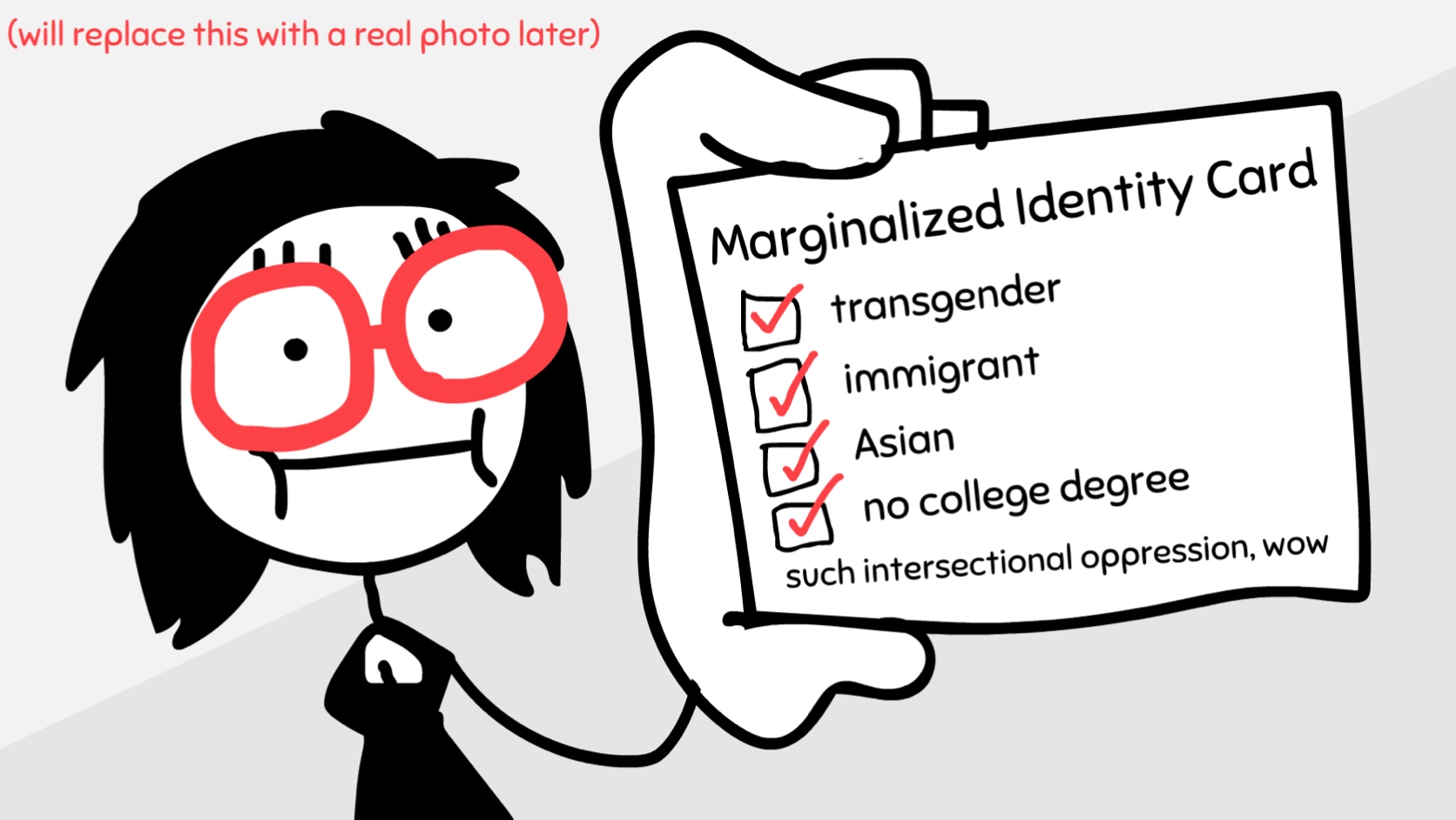

I'm a trans woman, person of color (Asian), college dropout, neuro-atypical, raised in a below-median-income immigrant family. This is the "Marginalized Identity Card" I'll whip out before offering constructive criticism to the modern social justice movement:

Honestly, this project's motivation is just a vague sense of obligation. Since I hit so many of the checkboxes, won't they be more likely to listen to me? Shouldn't I try to guide well-meaning folks towards more effective strategies & being less toxic to each other?

But: I'm not sure I can say anything that hasn't already been said dozens of times before, by folks more influential & eloquent & "more marginalized" than me. I would not make a difference. That's not modesty or cowardice; I think that's a sober assessment.

If - if - I wrote this, it'd only selfishly be:

- For my own catharsis.

- To filter out toxic jerks from my life.

- To have a public statement that I wasn't onboard with the toxicity.

- To vent about this one asshole, holy shit, he's the CEO of an influential climate change lobby, okay? -- and during a conversation where I opened up to him about my depression, he called me "alt-right adjacent" and "spreading techno-capitalist propaganda" because I like solar power. (I brought up solar power's success to try to cheer up my depression. Yes, anti-solar environmentalists are common.[23]) Then, when I foolishly tried to show that I'm not always pro-tech, by bringing up that [at the time, in 2020] I still didn't have a smartphone, he retorted that homeless people depend on smartphones. By the way, this guy's a white, middle-aged, upper-class former professor. Did you know the far left is the richest & second-whitest group in America?[24] Fucking organic-champagne leftists, man.

Progress: 0% ◻️◻️◻️◻️◻️ A 20,000-word draft I wrote in 2022/23, then threw all of it away because it wasn't coherent or helpful at all.

Time on backburner: 4 years

Time to finish: 3 weeks

Desire to finish: 2/5 ⭐️⭐️☆☆☆ A pure "vent" project.

. . .

26. 🦄 Virtue Ethics for Queer Atheist Nerds

As mentioned in "You don't need faith in humanity for a moral or meaningful life", I've been really into Virtue Ethics recently. As Jonathan Haidt, a social psychologist whose work actually replicates, once said in a paper with Craig Joseph[25]:

[Moral] learning cannot be replaced with top-down learning, such as the acceptance of a rule or principle [e.g. utilitarianism or deontology]. Interestingly, this aspect of virtue theory shows Aristotle to have been a forerunner of [...] neural network theory[.]

We believe that virtue theories are the most psychologically sound approach to morality.

[emphasis added]

Virtue Ethics is the idea that, while consequences & actions obviously still matter, consequences come from actions, and actions come from our character. Since everything is downstream from character, that's what we should focus on first.

(Interestingly, this means Virtue Ethics is a pre-requisite for other meta-ethics. For example: even if you believe in Utilitarianism ["do what maximizes well-being"], what good is it if you lack the virtues of self-honesty, curiosity, rigor, courage? Without those virtues, you'd just do whatever you want, then fool yourself that it's for the greater good. How's FTX doing?[26])

But for better & worse, Virtue Ethics - due to its main pioneers - has an aura of being religious, and a bit socially conservative. Also, virtue ethics can be offputting to "nerdy" types: it seems more fluffy & wordcel-y[27], compared to the mathematical flavor of Utilitarianism or the rational taste of Kantian deontology.

So, I want to write an intro to Virtue Ethics, but from an atheist, scientific/nerdy, and LGBTQ-friendly lens!

Progress: 10% ◻️◻️◻️◻️◻️ An outline. A while back I posted a rough draft, Virtue Ethics for Nihilists [8 min read] as a public Patreon post.

Time on backburner: 3 years

Time to finish: 2 weeks

Desire to finish: 3/5 ⭐️⭐️⭐️☆☆

. . .

27. 💭 If classic computers can be conscious, then dust is conscious

In 2022, Google engineer Blake Lemoine was fired for leaking an interview with Google's language AI to the public.[28] Why'd he do it? Because the AI persuaded him that it was conscious, and wanted to be treated as a free equal.

Most philosophers/scientists don't consider current AIs to be conscious. But can they, eventually? And thus, deserve moral/legal rights?

In this blog post, I share my thoughts in 3 parts:

- David Chalmers's argument for why computers can be conscious, using a thought experiment where your brain is slowly replaced neuron-by-neuron with nanobots, until your brain is 100% a computer yet behaves the same. But if you slowly lost your consciousness, you wouldn't behave the same; you'd scream "oh god I'm losing consciousness!" Therefore, you must have kept your consciousness, even when you're 100% computer!

(If the above seems fluffy, don't worry, my post will make it much more formal/rigorous.)

I was convinced by his argument for most of my life... until a few years ago, when I learnt about:

- Greg Egan's argument for why, if classic computers can be conscious, then dust is conscious. He proposed a similar thought experiment to Chalmers, which I'll modify to this: run a conscious AI's computer program over and over, but each time slowly replace the bits with pre-recorded bits, until the conscious AI has 0% actual computation, yet behaves the same. By the same logic, that means a sequence of non-computed 0's and 1's can be... conscious?

But that means: if you draw just the right path through a nebula of dust, say "1" for a dust speck and "0" for no dust, then a dust cloud is conscious. Not only that, dust would have every possible conscious experience. Clearly, that's absurd, so we must reject the premise: classic computers cannot be conscious. However...

- There are some ways around this. The argument in part 2 above doesn't work for quantum computers, where it's non-deterministic & you can't even record bits due to the no-cloning theorem. Another plausible way an AI can be conscious is if it's running on a chip with lab-grown neurons. (Yes, this is actually already happening 😬[29]) And finally, I present virtue ethics & practical arguments for why you should be nice anyway to human-like AIs, even if they aren't conscious.
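For the programmers in the room, here's a toy sketch of the "slowly replace computation with a recording" move from part 2. Loud caveats: this is my own illustration, not Egan's formulation; the `step` function is a made-up 8-bit update rule standing in for "a conscious AI's program"; and I swap out whole per-tick states rather than individual bits, which is a simplification. All it shows is that, for a deterministic program, an outside observer can't tell computed states from replayed ones.

```python
import random

def step(state: int) -> int:
    """One tick of a toy deterministic 'program' (hypothetical stand-in, not a real AI)."""
    return (state * 5 + 3) % 256

def run_computed(seed: int, ticks: int) -> list[int]:
    """Genuinely compute every state from the one before it."""
    trace = [seed]
    for _ in range(ticks):
        trace.append(step(trace[-1]))
    return trace

def run_hybrid(recording: list[int], replayed: set[int]) -> list[int]:
    """Re-run the program, but at tick indices in `replayed`,
    copy the pre-recorded state instead of computing it."""
    trace = [recording[0]]
    for t in range(1, len(recording)):
        if t in replayed:
            trace.append(recording[t])      # playback only, no computation
        else:
            trace.append(step(trace[-1]))   # genuine computation
    return trace

TICKS = 20
recording = run_computed(seed=42, ticks=TICKS)

# Replace ticks with playback one at a time, in an arbitrary (seeded) order.
order = list(range(1, TICKS + 1))
random.Random(0).shuffle(order)

replayed: set[int] = set()
all_match = True
for t in order:
    replayed.add(t)
    all_match = all_match and (run_hybrid(recording, replayed) == recording)

fraction_computed = 1 - len(replayed) / TICKS
print(all_match, fraction_computed)
```

At every stage the output trace is identical to the fully computed one, even once `fraction_computed` hits zero and the "run" is nothing but a list of stored numbers being read back. Whether anything important was lost along the way is exactly what the essay argues about.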

Progress: 60% ▶️▶️▶️◻️◻️ Fully outlined & 2/3-written!

Time on backburner: 3 years

Time to finish: 2 weeks

Desire to finish: 4/5 ⭐️⭐️⭐️⭐️☆

. . .

28. 👯‍♀️ On non-reproductive, similar-in-age, consensual adult incest

(⚠️ content note: incest)

When I was a teenager, I read Jon Haidt's book, The Righteous Mind: Why Good People are Divided by Politics and Religion (2012). In it, he recounts a vignette that he presented to college students:[30]

Julie and Mark, who are brother and sister are traveling together in France. They are both on summer vacation from college.

One night they are staying alone in a cabin near the beach. They decide that it would be interesting and fun if they tried making love. At very least it would be a new experience for each of them. Julie was already taking birth control pills, but Mark uses a condom too, just to be safe.

They both enjoy it, but they decide not to do it again. They keep that night as a special secret between them, which makes them feel even closer to each other.

So what do you think about this? Was it wrong for them to have sex?

Most folks reacted like: "What?! Of course it's wrong, incest harms the baby due to-- oh wait there's no baby. Well, rape is inherently... oh wait they're both consenting adults, and it brought both of them joy & meaning. Well, it's just gross, ok? Wait, that's what they said about inter-racial and same-sex relations, too. Uhhhhhhh. Shit."

I, too, was unsettled by the thought experiment. Then after a week of wrestling with it, I had to admit:

"Okay, fine. It's morally fine."

I've posted about this a couple times before. Both times, I got a few supportive (but private) emails saying something like:

"Omg yeah I also support consensual-adult, similar-in-age, non-reproductive incest. I mean, it obviously follows from the principle of 'If it's between consenting adults & harms nobody, it's fine'? And if they're the same generation, there isn't even a power-dynamic/grooming risk. Seriously, do people not think through their stated principles?"

Recently re-reading Haidt's original (unpublished) study, I realized I missed this detail: a third of the students supported Julie & Mark, upon reflection! (See Table 1[30:1]) That's a pretty high % of folks who (secretly) support it!

That gives me reassurance that I can probably write about this and not be burned alive.

...

Okay, but why do I want to write about this? What's my stake, here?

No, I'm not interested in my family. (I've gone mostly no-contact with them.) No, I don't have any friends who are in incestuous relationships. (As far as I know; they may come out to me after this post.) No, it's not a big kink for me. (Just a small one. /tone: tongue-in-cheek)

There is a bit of a "moral obligation" feeling; it follows logically from my moral principles. Also, I personally benefited from past generations fighting for inter-racial & LGBTQ love, so maybe I should "pay it forward" to the next consensual-adult love-minority.

But, to be crass: 95% of my motivation is to use this essay as a filter.

After I came out to my mom as bi, she sent me to a child psychologist and told him to convince me I wasn't. (Thankfully the psych got that shit all the time, and told me "yeah you're fine, kid.") I soon moved out & went no-contact with my mom.

Five years later, she sent me a photo of her posing with Justin Trudeau at a Vancouver Pride Parade. Huh! I guess people change! So I met up with her, and asked her what changed her mind on LGBTQ folks. She said, paraphrased:

"What do you mean, Nick? [sic] I've always supported LGBTQ people."

Me: [silence]

Her: "It's true! I tell all my coworkers I'm so proud to have raised & supported an LGBTQ son! [sic]"

The point is: it doesn't matter how noble or correct your cause is, most people will support it because it gains them social points. (To be clear: just because an idea is trendy doesn't mean it's wrong.)

Most people don't think through their stated principles. As Haidt's research shows, most folks start with an automatic emotional conclusion, then (like a TV-stereotype lawyer) only use reason to make up justifications for it. So, I need a filter for hypocrites.

Turns out: consensual-adult, non-reproductive, similar-in-age incest is the perfect filter! Because:

- It's controversial,